Upload files to 'NEW'

This commit is contained in:

parent

bce7e3cc52

commit

c96979d2aa

233

NEW/kubernetes资源对象Service.md

Normal file

@ -0,0 +1,233 @

<h1><center>Kubernetes Resource Object: Service</center></h1>

Author: 行癫 <piracy will be prosecuted>

------

## Part 1: Service

|

||||||

|

|

||||||

|

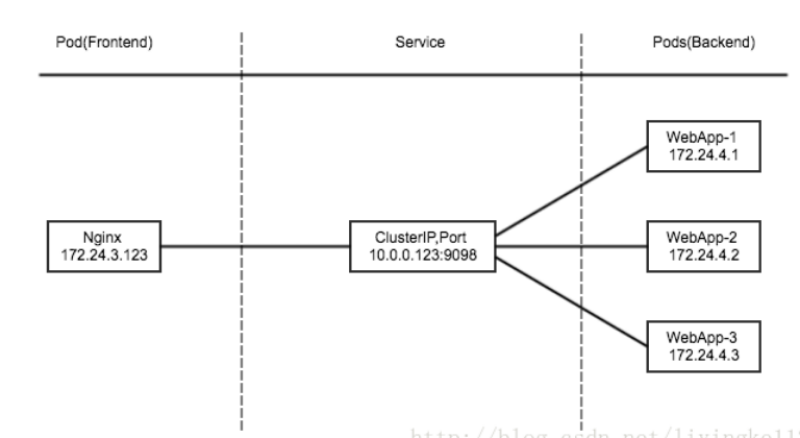

将运行在一组 [Pods](https://v1-23.docs.kubernetes.io/docs/concepts/workloads/pods/pod-overview/) 上的应用程序公开为网络服务的抽象方法

|

||||||

|

|

||||||

|

使用 Kubernetes,你无需修改应用程序即可使用不熟悉的服务发现机制;Kubernetes 为 Pods 提供自己的 IP 地址,并为一组 Pod 提供相同的 DNS 名, 并且可以在它们之间进行负载均衡

|

||||||

|

|

||||||

|

Kubernetes Service 定义了这样一种抽象:逻辑上的一组 Pod,一种可以访问它们的策略 —— 通常称为微服务

|

||||||

|

|

||||||

|

举个例子,考虑一个图片处理后端,它运行了 3 个副本。这些副本是可互换的 —— 前端不需要关心它们调用了哪个后端副本。 然而组成这一组后端程序的 Pod 实际上可能会发生变化, 前端客户端不应该也没必要知道,而且也不需要跟踪这一组后端的状态

#### 1. Defining a Service

For example, suppose you have a set of Pods that each listen on port 9376 and carry the label `app=MyApp`:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: MyApp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 9376
```

This manifest creates a Service object named "my-service" that proxies requests to TCP port 9376 on any Pod carrying the `app=MyApp` label.

Kubernetes assigns this Service an IP address (sometimes called the "cluster IP"), which is used by the service proxies.

Note:

A Service can map any incoming `port` to a `targetPort`. By default, `targetPort` is set to the same value as the `port` field.

#### 2. Multi-port Services

Some Services need to expose more than one port. Kubernetes lets you configure multiple port definitions on a Service object. When a Service has several ports, each port must be given a name so that it is unambiguous.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: MyApp
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: 9376
    - name: https
      protocol: TCP
      port: 443
      targetPort: 9377
```

## Part 2: Publishing Services

#### 1. Service types

ClusterIP

NodePort

LoadBalancer

ExternalName

#### 2. Service types in detail

Some parts of an application (a frontend, for example) may need to be exposed on an IP address outside the Kubernetes cluster.

Kubernetes `ServiceTypes` let you specify which kind of Service you want. The default is `ClusterIP`.

The `Type` values and their behavior are:

`ClusterIP`: exposes the Service on a cluster-internal IP. With this value the Service is reachable only from within the cluster. This is the default `ServiceType`.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

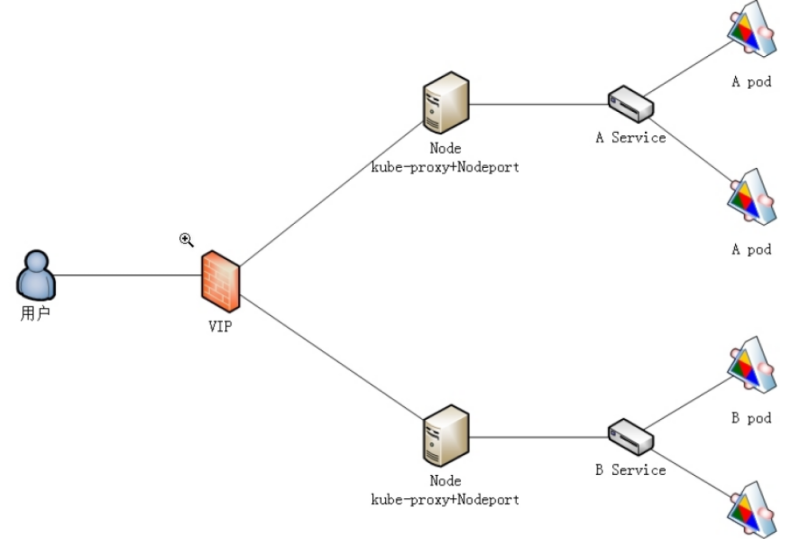

`NodePort`:通过每个节点上的 IP 和静态端口(`NodePort`)暴露服务。 `NodePort` 服务会路由到自动创建的 `ClusterIP` 服务。 通过请求 `<节点 IP>:<节点端口>`,你可以从集群的外部访问一个 `NodePort` 服务

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

[`LoadBalancer`](https://v1-23.docs.kubernetes.io/zh/docs/concepts/services-networking/service/#loadbalancer):使用云提供商的负载均衡器向外部暴露服务。 外部负载均衡器可以将流量路由到自动创建的 `NodePort` 服务和 `ClusterIP` 服务上

|

||||||

|

|

||||||

|

你也可以使用Ingress来暴露自己的服务。 Ingress 不是一种服务类型,但它充当集群的入口点。 它可以将路由规则整合到一个资源中,因为它可以在同一IP地址下公开多个服务

|

||||||

|

|

||||||

|

```shell

|

||||||

|

[root@master nginx]# kubectl expose deployment nginx-deployment --port=80 --type=LoadBalancer

|

||||||

|

```

#### 3. NodePort

If you set the `type` field to `NodePort`, the Kubernetes control plane allocates a port from the range specified by the `--service-node-port-range` flag (default: 30000-32767).

For example:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  type: NodePort
  selector:
    app: MyApp
  ports:
      # By default and for convenience, `targetPort` is set to the same value as the `port` field.
    - port: 80
      targetPort: 80
      # Optional field
      # By default and for convenience, the Kubernetes control plane allocates a port from a range (default: 30000-32767)
      nodePort: 30007
```
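
As a small illustration of the range mentioned above, the following sketch checks whether a port number could be used as a `nodePort`. The helper name `in_nodeport_range` is hypothetical (it is not part of kubectl), and the bounds assume the default `--service-node-port-range` of 30000-32767; pass other bounds if your cluster changes that flag.

```shell
# Hypothetical helper: succeed if PORT lies inside the NodePort range.
# Usage: in_nodeport_range PORT [MIN] [MAX]
in_nodeport_range() {
  local port="$1" min="${2:-30000}" max="${3:-32767}"
  case "$port" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}

if in_nodeport_range 30007; then
  echo "30007 can be requested as a nodePort"
fi
```

A value outside the configured range is rejected by the API server when the Service is created, so a check like this mainly saves a round trip.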

#### 4. Example

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx-server
          image: nginx:1.16
          ports:
            - containerPort: 80
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-services
  labels:
    app: nginx
spec:
  type: NodePort
  ports:
    - port: 88
      targetPort: 80
      nodePort: 30010
  selector:
    app: nginx
```

#### 5. Good to know: ExternalName

`ExternalName` is one of the Kubernetes `Service` types. It maps a Service to an external DNS name instead of to the set of Pods chosen by a `selector`. When you access the Service by name from inside the cluster, you are in fact reaching the external resource it points to.

Use cases

An `ExternalName` Service is a good fit in the following situations:

You need an in-cluster Service name that points to an external system or a third-party API

You want to decouple the Service name from the external resource name, so that when the external resource changes only the Service configuration has to be updated

Teams in a microservice architecture manage their own services and use `ExternalName` to call each other's services

Configuration

Creating an `ExternalName` Service is simple: set `spec.type` to `ExternalName` in the Service manifest and put the external domain name you want to map to in `spec.externalName`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: external-service
  namespace: default
spec:
  type: ExternalName
  externalName: example.com
```

Example

Suppose you run an e-commerce platform made up of several microservices, plus an external service dedicated to payments. The payment service is hosted by a third-party provider under the domain `payments.externalprovider.com`.

Background

Your platform has to call the payment gateway to complete a transaction. The gateway is provided by an external vendor and does not run inside your `Kubernetes` cluster, yet your application code should be able to reach it as easily as an internal service.

Using an `ExternalName` Service

To simplify the interaction with the external payment service, you can create an `ExternalName` Service named `payment-gateway`. All internal services can then reach the external payment service through `payment-gateway.default.svc.cluster.local` (assuming the default namespace) without hard-coding the external domain.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: payment-gateway
  namespace: default
spec:
  type: ExternalName
  externalName: payments.externalprovider.com
```
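
The in-cluster name used above follows the pattern `<service>.<namespace>.svc.<cluster-domain>`. The sketch below only assembles that string; the helper name `svc_fqdn` is hypothetical, and `cluster.local` is assumed as the cluster domain because that is what the text uses (a cluster can be configured with a different one).

```shell
# Hypothetical helper: build the in-cluster DNS name of a Service.
# Usage: svc_fqdn NAME [NAMESPACE] [CLUSTER_DOMAIN]
svc_fqdn() {
  local name="$1" namespace="${2:-default}" domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$name" "$namespace" "$domain"
}

svc_fqdn payment-gateway default

# Inside a Pod, the name could then be resolved to check the CNAME, e.g.:
#   nslookup "$(svc_fqdn payment-gateway default)"
```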

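Adjusting the application code

In your application code you only need to configure the Service name `payment-gateway`, or the fully qualified domain name (FQDN) `payment-gateway.default.svc.cluster.local` that follows the cluster's DNS rules, as the request target. In a Java Spring Boot application, for example, you can set the base URL of the REST client:

```java
@Bean
public RestTemplate restTemplate(RestTemplateBuilder builder) {
    // Note: this uses the Service name rather than the external domain directly
    return builder.rootUri("http://payment-gateway").build();
}
```

Because `ExternalName` works at the DNS level by returning a CNAME record, protocols that depend on the hostname, such as HTTP (the `Host` header) and HTTPS (the certificate name), may need extra configuration on the client or the provider side.

Summary

In this way an `ExternalName` Service lets you integrate external dependencies into a `Kubernetes` environment cleanly, while keeping a good level of abstraction and flexibility.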

141

NEW/kubernetes资源对象Volumes.md

Normal file

@ -0,0 +1,141 @

<h1><center>Kubernetes Resource Object: Volumes</center></h1>

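Author: 行癫 <piracy will be prosecuted>

------

## Part 1: Volumes

Files on disk in a container are ephemeral, which causes problems for non-trivial applications running in containers. The first problem is that files are lost when a container crashes: the kubelet restarts the container, but it comes back in a clean state. The second problem appears when several containers run in the same Pod and need to share files. The Kubernetes Volume abstraction solves both problems.

Docker also has a concept of volumes, but it is looser and less managed. A Docker volume is a directory on disk or in another container. Docker provides volume drivers, but their functionality is quite limited.

Kubernetes supports many types of volumes, and a Pod can use any number of volume types at the same time. Ephemeral volume types have the same lifetime as the Pod, whereas persistent volumes can outlive it. In both cases a volume outlives any individual container in the Pod, so data is preserved across container restarts. When a Pod ceases to exist, its ephemeral volumes are destroyed; persistent volumes are not.

At its core, a volume is a directory, possibly containing some data, that the containers in a Pod can access. The particular volume type determines how that directory comes to be, which medium backs it, and what it contains.

To use a volume, list the volumes to provide for the Pod in `.spec.volumes` and declare where each container mounts them in `.spec.containers[*].volumeMounts`.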

#### 1. cephfs

A `cephfs` volume lets you mount an existing CephFS volume into a Pod. Unlike `emptyDir`, which is erased when the Pod is removed, the contents of a `cephfs` volume are preserved when the Pod is deleted; the volume is merely unmounted. This means a `cephfs` volume can be pre-populated with data, and that data can be shared between Pods. The same `cephfs` volume can be mounted by multiple writers simultaneously.

For detailed usage, see the official example:

```shell
https://github.com/kubernetes/examples/tree/master/volumes/cephfs/
```

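#### 2. hostPath

A `hostPath` volume mounts a file or directory from the host node's filesystem into your Pod. This is not something most Pods need, but it offers a powerful escape hatch for some applications.

In addition to the required `path` property, a `hostPath` volume accepts an optional `type`. The supported values are:

| **Value** | **Behavior** |
| :---------------: | :----------------------------------------------------------: |
|                   | Empty string (default), kept for backward compatibility: no checks are performed before the hostPath volume is mounted. |
| DirectoryOrCreate | If nothing exists at the given path, an empty directory is created there as needed, with permissions 0755 and the same group and ownership as the kubelet. |
| Directory         | A directory must exist at the given path. |
| FileOrCreate      | If nothing exists at the given path, an empty file is created there as needed, with permissions 0644 and the same group and ownership as the kubelet. |
| File              | A file must exist at the given path. |
| Socket            | A UNIX socket must exist at the given path. |
| CharDevice        | A character device must exist at the given path. |
| BlockDevice       | A block device must exist at the given path. |

hostPath configuration example: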

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
    - image: k8s.gcr.io/test-webserver
      name: test-container
      volumeMounts:
        - mountPath: /test-pd
          name: test-volume
  volumes:
    - name: test-volume
      hostPath:
        # Directory location on the host
        path: /data
        # This field is optional
        type: Directory
```

Example:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-tomcat-1
  labels:
    app: tomcat-1

spec:
  replicas: 2
  selector:
    matchLabels:
      app: tomcat-1
  template:
    metadata:
      labels:
        app: tomcat-1
    spec:
      containers:
        - name: tomcat-1
          image: daocloud.io/library/tomcat:8-jdk8
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8080
          volumeMounts:
            - mountPath: /usr/local/tomcat/webapps
              name: xingdian
      volumes:
        - name: xingdian
          hostPath:
            path: /opt/apps/web
            type: Directory
---
apiVersion: v1
kind: Service
metadata:
  name: tomcat-service-1
  labels:
    app: tomcat-1
spec:
  type: NodePort
  ports:
    - port: 888
      targetPort: 8080
      nodePort: 30021
  selector:
    app: tomcat-1
```
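
Because the volume above uses `type: Directory`, the path has to exist already on whichever node a Pod is scheduled to; otherwise the mount fails. A minimal sketch of preparing it, assuming the `/opt/apps/web` path from the example. The helper name `prepare_hostpath` is made up for illustration.

```shell
# Hypothetical helper: create the directory that a hostPath volume of
# type Directory expects, with 0755 permissions.
prepare_hostpath() {
  local dir="${1:-/opt/apps/web}"
  mkdir -p "$dir" && chmod 0755 "$dir"
}

# On every node that may run the Pod (as root):
#   prepare_hostpath /opt/apps/web
```

Since the Deployment runs 2 replicas that may land on different nodes, each node serves whatever is in its own local copy of the directory.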

217

NEW/基于kubeadm部署kubernetes集群.md

Normal file

@ -0,0 +1,217 @

<h1><center>Deploying a Kubernetes Cluster with kubeadm</center></h1>

Author: 行癫 <piracy will be prosecuted>

------

## Part 1: Environment preparation

Four servers: one master and three worker nodes. The master node needs at least 2 CPU cores.

| Node name | IP address |
| :------: | :--------: |
|  master  | 10.0.0.220 |
|  node-1  | 10.0.0.221 |
|  node-2  | 10.0.0.222 |
|  node-3  | 10.0.0.223 |

#### 1. Disable the firewall, SELinux, and swap on all servers

```shell
[root@localhost ~]# systemctl stop firewalld
[root@localhost ~]# systemctl disable firewalld
[root@localhost ~]# setenforce 0
[root@localhost ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
[root@localhost ~]# swapoff -a                            # turn swap off for the current boot
[root@localhost ~]# sed -i 's/.*swap.*/#&/' /etc/fstab    # keep swap off after a reboot
# Note:
# Swap must be disabled on every server
# Run on all nodes
```
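
The `sed` expression for `/etc/fstab` prefixes every line containing `swap` with `#`, so running it a second time adds another `#`. The following is a more defensive sketch, not the author's method: it skips lines that are already comments and only touches lines where `swap` appears as its own whitespace-separated field. The function name `comment_out_swap` is hypothetical, GNU `sed` is assumed, and the demo runs against a temporary file rather than the real `/etc/fstab`.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Usage: comment_out_swap [FILE]   (defaults to /etc/fstab)
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Skip lines that already start with '#'; prefix the rest when they
  # contain "swap" surrounded by whitespace.
  sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Demo on a scratch copy
demo=$(mktemp)
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo"
comment_out_swap "$demo"
comment_out_swap "$demo"   # a second run leaves the file unchanged
cat "$demo"
rm -f "$demo"
```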

#### 2. Make sure the yum repositories work

```shell
[root@localhost ~]# yum clean all
[root@localhost ~]# yum makecache fast
# Note:
# Use a yum mirror located in China
# Run on all nodes
```

#### 3. Set the hostnames

```shell
[root@localhost ~]# hostnamectl set-hostname master
[root@localhost ~]# hostnamectl set-hostname node-1
[root@localhost ~]# hostnamectl set-hostname node-2
[root@localhost ~]# hostnamectl set-hostname node-3
# Note:
# Run on all nodes, each node using the command with its own name
```

#### 4. Add local name resolution

```shell
[root@master ~]# cat >> /etc/hosts <<eof
10.0.0.220 master
10.0.0.221 node-1
10.0.0.222 node-2
10.0.0.223 node-3
eof
# Note:
# Run on all nodes
```
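
Appending with `cat >>` adds the same four lines again every time the step is repeated. As a sketch of an idempotent alternative, the helper below (the name `add_host_entry` is hypothetical) appends an entry only when the exact IP and name pair is not present yet. The third argument exists so the function can be tried on a scratch file first.

```shell
# Hypothetical helper: add "IP NAME" to a hosts file unless it is already there.
# Usage: add_host_entry IP NAME [FILE]   (FILE defaults to /etc/hosts)
add_host_entry() {
  local ip="$1" name="$2" hosts="${3:-/etc/hosts}"
  # awk exits 0 only if a line has IP as first field and NAME as second
  if ! awk -v ip="$ip" -v name="$name" \
       '$1 == ip && $2 == name { found = 1 } END { exit !found }' "$hosts" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts"
  fi
}

# Demo on a scratch file; on a real node, drop the third argument
scratch=$(mktemp)
add_host_entry 10.0.0.220 master "$scratch"
add_host_entry 10.0.0.221 node-1 "$scratch"
add_host_entry 10.0.0.220 master "$scratch"   # no duplicate is written
cat "$scratch"
rm -f "$scratch"
```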

#### 5. Install the container runtime (Docker)

```shell
[root@master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2
[root@master ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
[root@master ~]# yum -y install docker-ce
[root@master ~]# systemctl start docker
[root@master ~]# systemctl enable docker
# Note:
# Run on all nodes
```

#### 6. Install kubeadm and kubelet

```shell
[root@master ~]# cat >> /etc/yum.repos.d/kubernetes.repo <<eof
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
eof
[root@master ~]# yum -y install kubeadm kubelet kubectl ipvsadm
# Note:
# Run on all nodes
# This installs the latest version; a specific version can be pinned by
# appending it to the package name, for example kubeadm-1.19.4
```

#### 7. Configure the kubelet cgroup driver

```shell
[root@master ~]# cat >/etc/sysconfig/kubelet<<EOF
KUBELET_EXTRA_ARGS="--cgroup-driver=cgroupfs --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"
EOF
# Upstream pause image, noted for reference: k8s.gcr.io/pause:3.6
```

#### 8. Load the kernel module

```shell
[root@master ~]# modprobe br_netfilter
# Note:
# Run on all nodes
```
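
`modprobe` loads the module only for the current boot. On systemd-based systems, one common way to have it loaded at startup is a file under `/etc/modules-load.d/`. The sketch below writes such a file; the helper name `persist_module` and the file name `k8s.conf` are assumptions chosen for illustration, and the demo writes to a temporary path instead of `/etc`.

```shell
# Hypothetical helper: record a module name so it is loaded at boot.
# Usage: persist_module MODULE [CONF_FILE]
persist_module() {
  local module="$1" conf="${2:-/etc/modules-load.d/k8s.conf}"
  # Append only if the exact module name is not listed yet
  grep -qx "$module" "$conf" 2>/dev/null || echo "$module" >> "$conf"
}

# Demo on a scratch file; on a real node (as root) omit the second argument
scratch=$(mktemp)
persist_module br_netfilter "$scratch"
persist_module br_netfilter "$scratch"   # no duplicate line
cat "$scratch"
rm -f "$scratch"
```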

#### 9. Set kernel parameters

```shell
[root@master ~]# cat >> /etc/sysctl.conf <<eof
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
eof
[root@master ~]# sysctl -p
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness = 0
# Note:
# Run on all nodes
```

## Part 2: Deploying Kubernetes

#### 1. Download the images

```shell
https://www.xingdiancloud.cn/index.php/s/6GyinxZwSRemHPz
# Note:
# After downloading, upload the archives to all nodes
```

#### 2. Import the images

```shell
[root@master ~]# cat image_load.sh
#!/bin/bash
# Load every image archive (file name containing "tar") in the current directory
image_path=$(pwd)
for i in "${image_path}"/*tar*
do
    [ -e "$i" ] || continue    # nothing matched the pattern
    docker load -i "$i"
done
[root@master ~]# bash image_load.sh
# Note:
# Run on all nodes
```

#### 3. Initialize the master node

```shell
[root@master ~]# kubeadm init --kubernetes-version=1.23.1 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=10.0.0.220

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.220:6443 --token mzrm3c.u9mpt80rddmjvd3g \
    --discovery-token-ca-cert-hash sha256:fec53dfeacc5187d3f0e3998d65bd3e303fa64acd5156192240728567659bf4a
```

#### 4. Install the Pod network add-on (flannel)

```shell
[root@master ~]# wget http://www.xingdiancloud.cn:92/index.php/s/3Ad7aTxqPPja24M/download/flannel.yaml
[root@master ~]# kubectl create -f flannel.yaml
```

#### 5. Join the worker nodes to the cluster

```shell
[root@node-1 ~]# kubeadm join 10.0.0.220:6443 --token mzrm3c.u9mpt80rddmjvd3g --discovery-token-ca-cert-hash sha256:fec53dfeacc5187d3f0e3998d65bd3e303fa64acd5156192240728567659bf4a
# Note:
# The token used here is the one printed when the master was initialized
# Tokens expire after a while (24 hours by default); a new one then has to be
# generated with a command (covered in the next installment)
# If you did not record the join command, it can be regenerated as follows
# (--ttl=0 creates a token that never expires):
kubeadm token create --print-join-command --ttl=0
```

#### 6. Check the cluster state from the master node

```shell
[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES                  AGE     VERSION
master   Ready    control-plane,master   26m     v1.23.1
node-1   Ready    <none>                 4m45s   v1.23.1
node-2   Ready    <none>                 4m40s   v1.23.1
node-3   Ready    <none>                 4m46s   v1.23.1
```
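
Nodes usually stay `NotReady` for a short while after joining, until the network add-on is running on them. The sketch below counts the nodes whose STATUS column is not exactly `Ready`, reading `kubectl get nodes --no-headers` style text from standard input. The function name `count_not_ready` is hypothetical; note that a cordoned node (`Ready,SchedulingDisabled`) is also counted by this simple comparison.

```shell
# Hypothetical helper: count lines whose second column is not "Ready".
# Usage: kubectl get nodes --no-headers | count_not_ready
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Demo with sample text instead of a live cluster
count_not_ready <<'EOF'
master   Ready      control-plane,master   26m     v1.23.1
node-1   NotReady   <none>                 10s     v1.23.1
EOF
```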

953

NEW/基于kubeadm部署kubernetes集群1-25版本.md

Normal file

@ -0,0 +1,953 @

<h1><center>Deploying a Kubernetes Cluster with kubeadm</center></h1>

Author: 行癫 <piracy will be prosecuted>

------

## Part 1: Environment preparation

Four servers: one master and three worker nodes. The master node needs at least 2 CPU cores.

| Node name | IP address |
| :------: | :--------: |
|  master  | 10.0.0.220 |
|  node-1  | 10.0.0.221 |
|  node-2  | 10.0.0.222 |
|  node-3  | 10.0.0.223 |

#### 1. Disable the firewall, SELinux, and swap on all servers

```shell
[root@localhost ~]# systemctl stop firewalld
[root@localhost ~]# systemctl disable firewalld
[root@localhost ~]# setenforce 0
[root@localhost ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
[root@localhost ~]# swapoff -a                            # turn swap off for the current boot
[root@localhost ~]# sed -i 's/.*swap.*/#&/' /etc/fstab    # keep swap off after a reboot
# Note:
# Swap must be disabled on every server
# Run on all nodes
```

#### 2. Make sure the yum repositories work

```shell
[root@localhost ~]# yum clean all
[root@localhost ~]# yum makecache fast
# Note:
# Use a yum mirror located in China
# Run on all nodes
```

#### 3. Set the hostnames

```shell
[root@localhost ~]# hostnamectl set-hostname master
[root@localhost ~]# hostnamectl set-hostname node-1
[root@localhost ~]# hostnamectl set-hostname node-2
[root@localhost ~]# hostnamectl set-hostname node-3
# Note:
# Run on all nodes, each node using the command with its own name
```

#### 4. Add local name resolution

```shell
[root@master ~]# cat >> /etc/hosts <<eof
10.0.0.220 master
10.0.0.221 node-1
10.0.0.222 node-2
10.0.0.223 node-3
eof
# Note:
# Run on all nodes
```

#### 5. Install the container runtime

```shell
# Step 1: Installing containerd
# Reference: https://github.com/containerd/containerd/blob/main/docs/getting-started.md
[root@master ~]# wget https://github.com/containerd/containerd/releases/download/v1.6.8/containerd-1.6.8-linux-amd64.tar.gz
[root@master ~]# tar xf containerd-1.6.8-linux-amd64.tar.gz
[root@master ~]# cp bin/* /usr/local/bin
# Create the systemd unit file that manages the service; related paths:
#   /usr/local/lib/systemd/system/containerd.service
#   /etc/systemd/system/multi-user.target.wants/containerd.service
[root@master ~]# cat containerd.service
# Copyright The containerd Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

[Unit]
Description=containerd container runtime
Documentation=https://containerd.io
After=network.target local-fs.target

[Service]
#uncomment to enable the experimental sbservice (sandboxed) version of containerd/cri integration
#Environment="ENABLE_CRI_SANDBOXES=sandboxed"
ExecStartPre=-/sbin/modprobe overlay
ExecStart=/usr/local/bin/containerd

Type=notify
Delegate=yes
KillMode=process
Restart=always
RestartSec=5
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNPROC=infinity
LimitCORE=infinity
LimitNOFILE=infinity
# Comment TasksMax if your systemd version does not supports it.
# Only systemd 226 and above support this version.
TasksMax=infinity
OOMScoreAdjust=-999

[Install]
WantedBy=multi-user.target
[root@master ~]# systemctl daemon-reload
[root@master ~]# systemctl start containerd

# Step 2: Installing runc
# Reference: https://github.com/opencontainers/runc/releases
[root@master ~]# wget https://github.com/opencontainers/runc/releases/download/v1.1.4/runc.amd64
[root@master ~]# install -m 755 runc.amd64 /usr/local/sbin/runc

# Step 3: Installing CNI plugins
# Reference: https://github.com/containernetworking/plugins/releases
[root@master ~]# wget https://github.com/containernetworking/plugins/releases/download/v1.1.1/cni-plugins-linux-amd64-v1.1.1.tgz
[root@master ~]# mkdir -p /opt/cni/bin
[root@master ~]# tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.1.1.tgz
./
./macvlan
./static
./vlan
./portmap
./host-local
./vrf
./bridge
./tuning
./firewall
./host-device
./sbr
./loopback
./dhcp
./ptp
./ipvlan
./bandwidth

# Note:
# Run on all nodes
```

#### 6. Install kubeadm and kubelet

```shell
# Use only one of the two repositories below.
# Upstream repository (outside China):
[root@master ~]# cat >> /etc/yum.repos.d/kubernetes.repo <<eof
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch
enabled=1
gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
eof
[root@master ~]# yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

# Mirror inside China:
[root@master ~]# cat >> /etc/yum.repos.d/kubernetes.repo <<eof
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
eof
[root@master ~]# yum -y install kubeadm kubelet kubectl ipvsadm
# Note:
# Run on all nodes
# This installs the latest version; a specific version can be pinned by
# appending it to the package name, for example kubeadm-1.25.0

# Adjust the kubelet configuration
[root@master ~]# cat /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS="--pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.6"

[root@master ~]# cat /etc/systemd/system/multi-user.target.wants/kubelet.service
[Unit]
Description=kubelet: The Kubernetes Node Agent
Documentation=https://kubernetes.io/docs/
Wants=network-online.target
After=network-online.target

[Service]
ExecStart=/usr/bin/kubelet \
  --container-runtime=remote \
  --container-runtime-endpoint=unix:///run/containerd/containerd.sock
Restart=always
StartLimitInterval=0
RestartSec=10

[Install]
WantedBy=multi-user.target
```

#### 7. Load the kernel module

```shell
[root@master ~]# modprobe br_netfilter
# Note:
# Run on all nodes
```

#### 8. Set kernel parameters

```shell
[root@master ~]# cat >> /etc/sysctl.conf <<eof
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
net.ipv4.ip_forward=1
eof
[root@master ~]# sysctl -p
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness = 0
# Note:
# Run on all nodes
```

|

||||||

|

|

||||||

|

## 二:部署Kubernetes

|

||||||

|

|

||||||

|

#### 1.镜像下载

```shell
[root@master ~]# cat image.sh
ctr -n k8s.io i pull -k registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.4-0
ctr -n k8s.io i pull -k registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.9.3
ctr -n k8s.io i pull -k registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.25.0
ctr -n k8s.io i pull -k registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.25.0
ctr -n k8s.io i pull -k registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.25.0
ctr -n k8s.io i pull -k registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.25.0
ctr -n k8s.io i pull -k registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
ctr -n k8s.io i pull -k registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8

# flannel images
ctr -n k8s.io i pull docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
ctr -n k8s.io i pull docker.io/rancher/mirrored-flannelcni-flannel:v0.19.2

# Retag the mirrored images with the names that kubeadm and containerd expect
ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.4-0 registry.k8s.io/etcd:3.5.4-0
ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.9.3 registry.k8s.io/coredns/coredns:v1.9.3
ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 k8s.gcr.io/pause:3.6
ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.25.0 registry.k8s.io/kube-proxy:v1.25.0
ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.25.0 registry.k8s.io/kube-scheduler:v1.25.0
ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.25.0 registry.k8s.io/kube-controller-manager:v1.25.0
ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.25.0 registry.k8s.io/kube-apiserver:v1.25.0
ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8 registry.k8s.io/pause:3.8
[root@master ~]# bash image.sh
# Note: run this script on all nodes.

# Note: the command below lists the images that kubeadm requires.
[root@master ~]# kubeadm config images list
registry.k8s.io/kube-apiserver:v1.25.0
registry.k8s.io/kube-controller-manager:v1.25.0
registry.k8s.io/kube-scheduler:v1.25.0
registry.k8s.io/kube-proxy:v1.25.0
registry.k8s.io/pause:3.8
registry.k8s.io/etcd:3.5.4-0
registry.k8s.io/coredns/coredns:v1.9.3
```
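
The pull-and-retag pattern in `image.sh` can also be written as a loop. The following is only a sketch under stated assumptions: `mirror_ref`, `sync_image`, and the `RUN` switch are hypothetical helper names that do not appear in the original script, and the mapping assumes every mirrored image lives directly under `google_containers` with the same `name:tag`. By default it prints the `ctr` commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of the original image.sh.
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

# mirror_ref TARGET: print the mirror reference for a registry.k8s.io image.
# Only the final "name:tag" component is kept, so coredns/coredns maps to coredns.
mirror_ref() {
  echo "${MIRROR}/${1##*/}"
}

# sync_image TARGET: print the pull and tag commands; set RUN=1 to execute them.
sync_image() {
  local target="$1" src runner="echo"
  src="$(mirror_ref "$target")"
  if [ "${RUN:-0}" = "1" ]; then runner=""; fi
  $runner ctr -n k8s.io i pull -k "$src"
  $runner ctr -n k8s.io i tag "$src" "$target"
}

# Dry run over three of the images listed above.
for image in registry.k8s.io/kube-apiserver:v1.25.0 \
             registry.k8s.io/coredns/coredns:v1.9.3 \
             registry.k8s.io/pause:3.8; do
  sync_image "$image"
done
```

To cover every required image, the loop could instead read the output of `kubeadm config images list`, for example `kubeadm config images list | while read -r image; do sync_image "$image"; done`.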

#### 3. Initialize the master node

```shell
[root@master ~]# kubeadm init --kubernetes-version=1.25.0 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=10.0.0.220

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.2.150:6443 --token tlohed.bwgh4tt5erbc2zhg \
    --discovery-token-ca-cert-hash sha256:9b28238c55bf5b2e5e0d68c6303e50bf6f12e7e07126897c846a4f5328157e16
```

#### 4. Install the pod network add-on

```shell
[root@master ~]# vi kube-flannel.yaml
---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.19.2 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.2
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.19.2 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.2
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
[root@master ~]# kubectl create -f kube-flannel.yaml
```
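
The `Network` value in `net-conf.json` has to agree with the `--pod-network-cidr` passed to `kubeadm init` (both are `10.244.0.0/16` here). A minimal sketch of a pre-flight check follows. The function names `flannel_network` and `check_pod_cidr` are hypothetical, and the parsing assumes the `"Network"` key sits on a single line, as it does in the manifest above.

```shell
#!/usr/bin/env bash
# flannel_network MANIFEST: print the "Network" value from net-conf.json.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# check_pod_cidr MANIFEST CIDR: succeed only if the two values match.
check_pod_cidr() {
  local found
  found="$(flannel_network "$1")"
  if [ "$found" = "$2" ]; then
    echo "OK: flannel Network matches $2"
  else
    echo "MISMATCH: flannel Network is '$found', expected '$2'" >&2
    return 1
  fi
}
```

It could be run as `check_pod_cidr kube-flannel.yaml 10.244.0.0/16` before `kubectl create -f kube-flannel.yaml`.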

#### 5. Join the worker nodes to the cluster

```shell
[root@node-1 ~]# kubeadm join 10.0.0.220:6443 --token mzrm3c.u9mpt80rddmjvd3g --discovery-token-ca-cert-hash sha256:fec53dfeacc5187d3f0e3998d65bd3e303fa64acd5156192240728567659bf4a
# Note:
# This uses the token generated when the master was initialized.
# The token expires after a while, so a new one has to be generated on the master (covered in a later part).
# If you did not record the join command, regenerate it with:
kubeadm token create --print-join-command --ttl=0
```
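
Because a stale or mistyped token is a common reason for `kubeadm join` to fail, it can help to check the token's shape before using it. The sketch below is illustrative only: `valid_token` and `join_command` are hypothetical helpers, and the check covers the format alone (6 lowercase alphanumerics, a dot, then 16 more), not whether the token still exists on the master.

```shell
#!/usr/bin/env bash
# valid_token TOKEN: succeed if TOKEN has the kubeadm bootstrap-token format.
valid_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# join_command ENDPOINT TOKEN HASH: print the join command, or fail on a malformed token.
join_command() {
  valid_token "$2" || { echo "malformed token: $2" >&2; return 1; }
  echo "kubeadm join $1 --token $2 --discovery-token-ca-cert-hash $3"
}
```

On the master, `kubeadm token list` shows the tokens that currently exist together with their expiry times.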

#### 6. Check the cluster status from the master node

```shell
[root@master ~]# kubectl get node
NAME     STATUS   ROLES           AGE    VERSION
master   Ready    control-plane   7m     v1.25.0
node-1   Ready    <none>          2m7s   v1.25.0
node-2   Ready    <none>          50s    v1.25.0
node-3   Ready    <none>          110s   v1.25.0
```
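
Nodes usually report `NotReady` until the flannel pods are running, so the listing above may need a few retries. As a rough sketch, the hypothetical filter `not_ready_nodes` below reads `kubectl get node` output on standard input and prints the nodes that are not yet `Ready`. It compares the STATUS column literally, so a cordoned node shown as `Ready,SchedulingDisabled` would also be reported.

```shell
#!/usr/bin/env bash
# not_ready_nodes: print the names of nodes whose STATUS column is not "Ready".
# The first line of input is the header row and is skipped.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Typical use would be `kubectl get node | not_ready_nodes`. Alternatively, `kubectl wait --for=condition=Ready node --all --timeout=120s` blocks until every node is ready or the timeout expires.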

## 3: Deploy the Dashboard

#### 1. Enable IPVS mode for kube-proxy

```shell
# Export the kube-proxy ConfigMap, switch the proxy mode to ipvs, and apply it
[root@master ~]# kubectl get configmap kube-proxy -n kube-system -o yaml > kube-proxy-configmap.yaml
[root@master ~]# sed -i 's/mode: ""/mode: "ipvs"/' kube-proxy-configmap.yaml
[root@master ~]# kubectl apply -f kube-proxy-configmap.yaml
[root@master ~]# rm -f kube-proxy-configmap.yaml
# Delete the kube-proxy pods so that the DaemonSet recreates them with the new mode
[root@master ~]# kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'
```
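
The `sed` expression above only matches when the mode is still the empty default (`mode: ""`). A slightly more general sketch is shown below. `set_proxy_mode` and `proxy_mode` are hypothetical helpers, they assume GNU `sed` (as the original command does), and they expect the `mode:` key on its own line in the exported file.

```shell
#!/usr/bin/env bash
# set_proxy_mode FILE MODE: rewrite the `mode:` line whatever its current value is.
set_proxy_mode() {
  sed -i "s/^\([[:space:]]*mode:\)[[:space:]]*\".*\"/\1 \"$2\"/" "$1"
}

# proxy_mode FILE: print the configured mode (empty means the default).
proxy_mode() {
  sed -n 's/^[[:space:]]*mode:[[:space:]]*"\(.*\)"/\1/p' "$1" | head -n 1
}
```

For example, `set_proxy_mode kube-proxy-configmap.yaml ipvs` followed by `proxy_mode kube-proxy-configmap.yaml` should print `ipvs` before the file is applied. Restarting the pods can also be done with `kubectl rollout restart daemonset kube-proxy -n kube-system`.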

#### 2. Dashboard installation manifest

```shell
[root@master ~]# cat dashboard.yaml
# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.6.1
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host

|