Upload files to 'MD'

parent dd682a07cb
commit d1ca05a371

MD/kubernetes健康检查机制.md (Normal file, +204)

@ -0,0 +1,204 @

<h1><center>Kubernetes Health Check Mechanism</center></h1>

Author: 行癫 <piracy will be prosecuted>

------

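## I. Health Checks and Recovery

#### 1. Container health checks and recovery

In Kubernetes you can define a health-check probe for each container in a Pod. The kubelet then decides the container's state from the probe's result rather than from whether the container is merely running. In production, this is one of the main ways to keep an application alive and healthy.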

#### 2. Exec (command) probe

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: test-liveness-exec
spec:
  containers:
  - name: liveness
    image: daocloud.io/library/nginx
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5
```

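The first thing the container does after it starts is create a file named healthy under /tmp, as a marker that it is running normally. After 30 s it deletes that file.

The Pod also defines a livenessProbe (health check) of type exec. Once the container is up, the kubelet runs the specified command inside the container, here `cat /tmp/healthy`. If the file exists, the command exits with 0 and the kubelet treats the container as not only started but also healthy. The probe first runs 5 s after the container starts (initialDelaySeconds: 5) and then repeats every 5 s (periodSeconds: 5).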

Create the Pod:

```shell
[root@master diandian]# kubectl create -f test-liveness-exec.yaml
```

Check the Pod's status:

```shell
[root@master diandian]# kubectl get pod
NAME                 READY   STATUS    RESTARTS   AGE
test-liveness-exec   1/1     Running   0          10s
```

The container has started and the health check is passing, so the Pod is in the Running state.

After 30 s, look at the Pod's Events:

```shell
[root@master diandian]# kubectl describe pod test-liveness-exec
```

The Events section of the Pod reports a warning:

```shell
FirstSeen  LastSeen  Count  From               SubobjectPath              Type     Reason     Message
---------  --------  -----  ----               -------------              ----     ------     -------
2s         2s        1      {kubelet worker0}  spec.containers{liveness}  Warning  Unhealthy  Liveness probe failed: cat: can't open '/tmp/healthy': No such file or directory
```

Clearly, the probe found that /tmp/healthy no longer exists, so it reported the container as unhealthy. What happens next?

Check the Pod's status again:

```shell
[root@master diandian]# kubectl get pod test-liveness-exec
NAME                 READY   STATUS    RESTARTS   AGE
test-liveness-exec   1/1     Running   1          1m
```

The Pod did not enter the Failed state; it stayed Running. Why?

The RESTARTS field changing from 0 to 1 gives the answer: Kubernetes has already restarted the unhealthy container. The Pod stays in the Running state throughout.

Note:

Kubernetes has no equivalent of Docker's Stop semantics. So although this is called a restart, the container is actually recreated.

This is the Pod recovery mechanism in Kubernetes, controlled by restartPolicy. It is a standard field of the Pod spec (pod.spec.restartPolicy) and defaults to Always: whenever the container fails, it is recreated.

Tip:

A Pod is always recovered on the node it is currently on, never on another node. Once a Pod is bound to a node, it stays there unless the binding changes (the pod.spec.nodeName field is modified). This means that if the host goes down, the Pod does not migrate to another node on its own.

To have the Pod come up on another available node, you must manage it with a controller such as a Deployment, even if you need only one replica. This is the main difference between a single-replica Deployment and a bare Pod.
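The difference between a bare Pod and a single-replica Deployment can be sketched as follows. This is a minimal illustration that wraps the same liveness container from the exec probe example in a Deployment; the Deployment name and the `app` label are hypothetical and were chosen here, not taken from the original.

```yaml
# Sketch only: a Deployment with one replica around the exec-probe container.
# If the node fails, the Deployment controller creates a replacement Pod,
# which the scheduler can place on another available node.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: liveness-exec-deploy        # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: liveness-exec            # hypothetical label
  template:
    metadata:
      labels:
        app: liveness-exec
    spec:
      containers:
      - name: liveness
        image: daocloud.io/library/nginx
        args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
        livenessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 5
          periodSeconds: 5
```

The liveness probe still restarts the container in place on the same node; the Deployment only adds replacement of the whole Pod when it is lost.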

#### 3. HTTP GET probe

```shell
[root@master diandian]# vim liveness-httpget.yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness-httpget-pod
  namespace: default
spec:
  containers:
  - name: liveness-exec-container
    image: daocloud.io/library/nginx
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    livenessProbe:
      httpGet:
        port: http
        path: /index.html
      initialDelaySeconds: 1
      periodSeconds: 3
```

Create the Pod:

```shell
[root@master diandian]# kubectl create -f liveness-httpget.yaml
pod/liveness-httpget-pod created
```

Check the Pod's current state:

```shell
[root@master diandian]# kubectl describe pod liveness-httpget-pod
...
    Liveness:       http-get http://:http/index.html delay=1s timeout=1s period=3s #success=1 #failure=3
...
```
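The `Liveness:` line in the describe output lists five timing values, while the manifest sets only two of them. As a sketch, the same probe with every value written out looks like this; the three extra fields carry the Kubernetes defaults, which is why they show up in the output even though the manifest omits them.

```yaml
# Fragment of the container spec; the comments map each field
# to the corresponding item in the describe output.
livenessProbe:
  httpGet:
    port: http
    path: /index.html
  initialDelaySeconds: 1   # delay=1s
  periodSeconds: 3         # period=3s
  timeoutSeconds: 1        # timeout=1s (default)
  successThreshold: 1      # #success=1 (default)
  failureThreshold: 3      # #failure=3 (default)
```

With these values the kubelet restarts the container after three consecutive failed checks, so roughly nine seconds after the page stops being served.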

Test by removing index.html inside the container:

```shell
[root@master diandian]# kubectl exec liveness-httpget-pod -c liveness-exec-container -it -- /bin/sh
/ # ls
bin dev etc home lib media mnt proc root run sbin srv sys tmp usr var
/ # mv /usr/share/nginx/html/index.html index.html
/ # command terminated with exit code 137
```

As shown, shortly after index.html is moved away the probe fails and the container is killed, which terminates the exec session with exit code 137.

Check the Pod's details:

```shell
[root@master diandian]# kubectl describe pod liveness-httpget-pod
...
Normal  Killing  1m  kubelet, node02  Killing container with id docker://liveness-exec-container:Container failed liveness probe.. Container will be killed and recreated.
...
```

The output shows that the container failed its health check, so it is killed and recreated:

```shell
[root@master diandian]# kubectl get pods
NAME                   READY   STATUS    RESTARTS   AGE
liveness-httpget-pod   1/1     Running   1          33m
# RESTARTS is now 1
```

Log in to the container again and check:

```shell
[root@master diandian]# kubectl exec liveness-httpget-pod -c liveness-exec-container -it -- /bin/sh
/ # cat /usr/share/nginx/html/index.html
```

After logging in again, index.html is back, which shows that the container was recreated from the image.

#### 4. Pod restart policy

You can change how a Pod recovers by setting restartPolicy. There are three values:

Always: restart the container whenever it is not running, whatever the reason

OnFailure: restart the container only when it exits abnormally

Never: never restart the container
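The effect of the three values can be tried out with a container that exits on purpose. The following is a minimal sketch, not part of the original material: the Pod name and the shell command are hypothetical and exist only to produce a non-zero exit code.

```yaml
# Sketch only: a container that fails 10 s after it starts.
apiVersion: v1
kind: Pod
metadata:
  name: restart-policy-demo      # hypothetical name
spec:
  restartPolicy: OnFailure       # try Always or Never and compare
  containers:
  - name: task
    image: daocloud.io/library/nginx
    args:
    - /bin/sh
    - -c
    - sleep 10; exit 1           # non-zero exit code counts as a failure
```

With OnFailure or Always the RESTARTS counter keeps growing; with Never the Pod ends up Failed. Changing `exit 1` to `exit 0` makes the Pod finish as Succeeded under OnFailure, while Always would still restart it.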

Note:

The official documentation lists a large number of combinations of restartPolicy, container state, and Pod state. You do not need to memorize them; remembering the following two design principles is enough:

As long as the Pod's restartPolicy permits restarting the failed container (for example, Always), the Pod stays Running and the container is restarted. Otherwise the Pod enters the Failed state.

For a Pod with multiple containers, the Pod enters the Failed state only after all of its containers have failed. Until then the Pod is Running, and its READY column shows how many containers are healthy.

For example:

```shell
[root@master diandian]# kubectl get pod test-liveness-exec
NAME                 READY   STATUS    RESTARTS   AGE
test-liveness-exec   0/1     Running   1          1m
```

MD/kubernetes基础架构.md (Normal file, +84)

@ -0,0 +1,84 @

<h1><center>Kubernetes Basic Architecture</center></h1>

Author: 行癫 <piracy will be prosecuted>

------

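<h2>I. Introduction to Kubernetes</h2>

<h3>1. Overview</h3>

Kubernetes is an open-source system that grew out of Borg, the cluster manager Google kept as an internal secret weapon for more than a decade. It is a solution for running containers as a distributed system: a portable, extensible open-source platform for managing containerized workloads and services that facilitates declarative configuration and automation, and it has a large, fast-growing ecosystem.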

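<h3>2. What Kubernetes can do</h3>

With modern web services, users expect applications to be available 24/7, and developers expect to deploy new versions of those applications several times a day. Containerization helps package software to meet both goals, so that applications can be released and updated quickly and without downtime. Kubernetes helps you make sure those containerized applications run where and when you want, and helps them find the resources and tools they need to work.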

<h3>3. Kubernetes components</h3>

kube-apiserver: serves the Kubernetes API

kube-scheduler: handles scheduling

kube-controller-manager: handles container orchestration

kubelet: the agent on each node; it communicates with the Kubernetes master

kube-proxy: a network proxy that reflects the Kubernetes networking services on each node

<h3>5. Kubernetes top-level design</h3>

<img src="https://xingdian-image.oss-cn-beijing.aliyuncs.com/xingdian-image/nDWtBAVlo7IxRXbecbQ4QA.png" alt="img" style="zoom:50%;" />

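<h3>6. Why Kubernetes is so useful</h3>

**Traditional deployment era:**

Early on, organizations ran applications on physical servers. There was no way to define resource boundaries for applications on a physical server, which caused resource allocation problems. For example, if several applications run on one physical server, one of them may take most of the resources and degrade the performance of the others. One solution is to run each application on its own physical server, but this does not scale because resources are underutilized, and maintaining many physical servers is expensive for an organization.

**Virtualized deployment era:**

Virtualization was introduced as a solution. It lets you run multiple virtual machines (VMs) on a single physical server's CPU. Virtualization isolates applications between VMs and provides a level of security, because one application's data cannot be freely accessed by another application. Virtualization makes better use of the resources in a physical server and improves scalability, because applications can be added or updated easily, hardware costs drop, and so on. Each VM is a complete machine that runs all components, including its own operating system, on top of virtualized hardware.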

**Container deployment era:**

Containers are similar to VMs, but they have relaxed isolation properties and share the operating system (OS) among applications. Containers are therefore considered lightweight. Like a VM, a container has its own filesystem, CPU share, memory, process space, and so on. Because containers are decoupled from the underlying infrastructure, they are portable across clouds and OS distributions.

Containers are a good way to package and run applications. In production you need to manage the containers that run your applications and make sure there is no downtime. For example, if a container fails, another one needs to start.

Kubernetes gives you a framework for running distributed systems resiliently. It takes care of scaling, failover, deployment patterns, and more.

MD/kubernetes污点与容忍.md (Normal file, +123)

@ -0,0 +1,123 @

<h1><center>Kubernetes Taints and Tolerations</center></h1>

Author: 行癫 <piracy will be prosecuted>

------

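## I. Taints and Tolerations

nodeAffinity, whether used as a hard or a soft rule, attracts Pods to the intended nodes. Taints do the opposite: once a node is tainted, no Pod is scheduled onto it unless the Pod declares that it tolerates the taint. Taints are useful when, for example, you want to reserve the master node for Kubernetes system components, or set aside a group of nodes with special resources for certain Pods. Pods without a matching toleration are no longer scheduled onto the tainted nodes.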

#### 1. Tainting a node

```shell
[root@master yaml]# kubectl taint node node-2 key=value:NoSchedule
node/node-2 tainted
```

View taints:

```shell
# node-1 was not tainted above, so it reports <none>;
# run the same command against node-2 to see the taint that was just added
[root@master yaml]# kubectl describe node node-1 | grep Taint
Taints:             <none>
```

#### 2. Removing a taint from a node

```shell
[root@master yaml]# kubectl taint node node-2 key=value:NoSchedule-
node/node-2 untainted
```

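#### 3. Taint effects

NoSchedule: new Pods that do not tolerate the taint are not scheduled onto the node, but Pods already running on the node are not affected

NoExecute: new Pods that do not tolerate the taint are not scheduled onto the node, and Pods already running there are evicted

PreferNoSchedule: the scheduler tries to avoid placing Pods on the tainted node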
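A taint is stored on the Node object itself. As a sketch, after the `kubectl taint node node-2 key=value:NoSchedule` command from step 1, the relevant part of the node (for example as shown by `kubectl get node node-2 -o yaml`) looks roughly like this; all other fields are omitted.

```yaml
# Trimmed Node object: only the taint-related fields are shown.
apiVersion: v1
kind: Node
metadata:
  name: node-2
spec:
  taints:
  - key: key
    value: value
    effect: NoSchedule   # one of NoSchedule, NoExecute, PreferNoSchedule
```

Each entry combines a key, an optional value, and one of the three effects listed above, which is the same `key=value:effect` triple used on the command line.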

#### 4. Usage

If you still want a Pod to be scheduled onto a tainted node, the Pod spec must define a toleration for the taint. For example:

```shell
[root@master yaml]# kubectl taint node node-2 key=value:NoSchedule
node/node-2 tainted
[root@master yaml]# cat b.yaml
apiVersion: v1
kind: Pod
metadata:
  name: sss
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: app
            operator: In
            values:
            - myapp
  containers:
  - name: with-node-affinity
    image: daocloud.io/library/nginx:latest
# Note: the app=myapp label is set on node-2, so the affinity rule selects node-2,
# but node-2 is tainted, so scheduling fails. The revised YAML file follows.
[root@master yaml]# cat b.yaml
apiVersion: v1
kind: Pod
metadata:
  name: sss-1
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: app
            operator: In
            values:
            - myapp
  containers:
  - name: with-node-affinity
    image: daocloud.io/library/nginx:latest
  tolerations:
  - key: "key"
    operator: "Equal"
    value: "value"
    effect: "NoSchedule"
```

Result: the old Pod fails to schedule and the new one is scheduled successfully

```shell
[root@master yaml]# kubectl get pod -o wide
NAME    READY   STATUS    RESTARTS   AGE    IP           NODE     NOMINATED NODE   READINESS GATES
sss     0/1     Pending   0          3m2s   <none>       <none>   <none>           <none>
sss-1   1/1     Running   0          7s     10.244.2.9   node-2   <none>           <none>
```

Note:

tolerations: # adds the toleration

\- key: "key" # the key of the taint that was set on the node

operator: "Equal" # the operator

value: "value" # the tolerated value; together with the key it matches key=value

effect: "NoSchedule" # the tolerated effect; it must be the same as the effect of the taint on the node

If operator is Exists, the value field can be omitted

If operator is Equal, the toleration's value must equal the taint's value for that key

If operator is not specified, it defaults to Equal
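The Exists operator described in the note above can be sketched as follows. This is an illustrative variant of the sss-1 Pod without the affinity rule; the Pod name is hypothetical. It tolerates any taint whose key is `key` and whose effect is NoSchedule, whatever the taint's value is.

```yaml
# Sketch only: toleration with operator Exists, so no value is given.
apiVersion: v1
kind: Pod
metadata:
  name: sss-exists               # hypothetical name
spec:
  containers:
  - name: nginx
    image: daocloud.io/library/nginx:latest
  tolerations:
  - key: "key"
    operator: "Exists"           # matches key=<any value>
    effect: "NoSchedule"
```

A toleration only allows the Pod onto the tainted node; it does not force it there. To pin the Pod to node-2 as in the earlier example, the nodeAffinity block is still needed.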

MD/kubernetes资源对象ConfigMap.md (Normal file, +395)

@ -0,0 +1,395 @

<h1><center>Kubernetes Resource Object: ConfigMap</center></h1>

Author: 行癫 <piracy will be prosecuted>

------

## I. ConfigMap

A ConfigMap is a Kubernetes resource object for storing configuration files; all of its contents are stored in etcd. ConfigMap is similar to Secret.

#### 1. Differences between ConfigMap and Secret

A ConfigMap holds configuration that an application needs and that does not have to be encrypted

ConfigMap is used almost exactly like Secret: you can create one from a file or a directory with kubectl create configmap, or write the ConfigMap object's YAML file directly

#### 2. Creating a ConfigMap

Method 1: pass the key/value pairs directly on the command line, using --from-literal

Method 2: create from a file, turning one configuration file into a ConfigMap, using --from-file=<file>

Method 3: create from a directory, turning all configuration files in the directory into one ConfigMap, using --from-file=<directory>

Method 4: write a standard ConfigMap YAML file in advance, then create it with kubectl create -f

Creating with the --from-literal command-line option:

Create command

```shell
[root@master yaml]# kubectl create configmap test-config1 --from-literal=db.host=10.5.10.116 --from-literal=db.port='3306'
configmap/test-config1 created
```

The result appears under data below

```shell
[root@master yaml]# kubectl get configmap test-config1 -o yaml
apiVersion: v1
data:
  db.host: 10.5.10.116
  db.port: "3306"
kind: ConfigMap
metadata:
  creationTimestamp: "2019-02-14T08:22:34Z"
  name: test-config1
  namespace: default
  resourceVersion: "7587"
  selfLink: /api/v1/namespaces/default/configmaps/test-config1
  uid: adfff64c-3031-11e9-abbe-000c290a5b8b
```

Creating from a file:

The configuration file app.properties has the following content

```shell
[root@master yaml]# cat app.properties
property.1 = value-1
property.2 = value-2
property.3 = value-3
property.4 = value-4

[mysqld]
!include /home/wing/mysql/etc/mysqld.cnf
port = 3306
socket = /home/wing/mysql/tmp/mysql.sock
pid-file = /wing/mysql/mysql/var/mysql.pid
basedir = /home/mysql/mysql
datadir = /wing/mysql/mysql/var
```

Create (--from-file can be given more than once)

```shell
[root@master yaml]# kubectl create configmap test-config2 --from-file=./app.properties
```

The result appears under data below

```shell
[root@master yaml]# kubectl get configmap test-config2 -o yaml
apiVersion: v1
data:
  app.properties: |
    property.1 = value-1
    property.2 = value-2
    property.3 = value-3
    property.4 = value-4

    [mysqld]
    !include /home/wing/mysql/etc/mysqld.cnf
    port = 3306
    socket = /home/wing/mysql/tmp/mysql.sock
    pid-file = /wing/mysql/mysql/var/mysql.pid
    basedir = /home/mysql/mysql
    datadir = /wing/mysql/mysql/var
kind: ConfigMap
metadata:
  creationTimestamp: "2019-02-14T08:29:33Z"
  name: test-config2
  namespace: default
  resourceVersion: "8176"
  selfLink: /api/v1/namespaces/default/configmaps/test-config2
  uid: a8237769-3032-11e9-abbe-000c290a5b8b
```

When a ConfigMap is created from a file, it gets one key/value pair: the key is the file name and the value is the file content. If you do not want the default file name as the key, you can specify the key name at creation time

```shell
[root@master yaml]# kubectl create configmap game-config-3 --from-file=<my-key-name>=<path-to-file>
```

Creating from a directory:

The files config-1 and config-2 in the configs directory have the following content

```shell
[root@master yaml]# tail configs/config-1
aaa
bbb
c=d
[root@master yaml]# tail configs/config-2
eee
fff
h=k
```

Create

```shell
[root@master yaml]# kubectl create configmap test-config3 --from-file=./configs
```

The result appears under data below

```shell
[root@master yaml]# kubectl get configmap test-config3 -o yaml
apiVersion: v1
data:
  config-1: |
    aaa
    bbb
    c=d
  config-2: |
    eee
    fff
    h=k
kind: ConfigMap
metadata:
  creationTimestamp: "2019-02-14T08:37:05Z"
  name: test-config3
  namespace: default
  resourceVersion: "8808"
  selfLink: /api/v1/namespaces/default/configmaps/test-config3
  uid: b55ffbeb-3033-11e9-abbe-000c290a5b8b
```

When a ConfigMap is created from a directory, each file in the directory becomes one key/value pair: the key is the file name and the value is the file content. Subdirectories are ignored

Creating from a standard ConfigMap YAML file written in advance:

The YAML file is shown below. Note how a key whose value spans multiple lines is written

```shell
[root@master yaml]# cat configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: test-config4
  namespace: default
data:
  cache_host: memcached-gcxt
  cache_port: "11211"
  cache_prefix: gcxt
  my.cnf: |
    [mysqld]
    log-bin = mysql-bin
    haha = hehe
```

Create

```shell
[root@master yaml]# kubectl apply -f configmap.yaml
configmap/test-config4 created
```

The result appears under data below

```shell
[root@master yaml]# kubectl get configmap test-config4 -o yaml
apiVersion: v1
data:
  cache_host: memcached-gcxt
  cache_port: "11211"
  cache_prefix: gcxt
  my.cnf: |
    [mysqld]
    log-bin = mysql-bin
    haha = hehe
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"cache_host":"memcached-gcxt","cache_port":"11211","cache_prefix":"gcxt","my.cnf":"[mysqld]\nlog-bin = mysql-bin\nhaha = hehe\n"},"kind":"ConfigMap","metadata":{"annotations":{},"name":"test-config4","namespace":"default"}}
  creationTimestamp: "2019-02-14T08:46:57Z"
  name: test-config4
  namespace: default
  resourceVersion: "9639"
  selfLink: /api/v1/namespaces/default/configmaps/test-config4
  uid: 163fbe1e-3035-11e9-abbe-000c290a5b8b
```

View the details of the ConfigMaps

```shell
[root@master yaml]# kubectl describe configmap
```

#### 3. Using a ConfigMap

Pass the values to the Pod directly as environment variables

Use the values in the command line that the Pod runs

Mount the ConfigMap into the Pod as a volume

Example ConfigMap file:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: special-config
  namespace: default
data:
  special.how: very
  special.type: charm
```

Using it through environment variables:

Use valueFrom, configMapKeyRef, name, and key to select the key to use

```shell
[root@master yaml]# cat testpod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: dapi-test-pod
spec:
  containers:
  - name: test-container
    image: daocloud.io/library/nginx
    env:
    - name: SPECIAL_LEVEL_KEY     # name of the new variable set in the container
      valueFrom:
        configMapKeyRef:
          name: special-config    # the ConfigMap the value comes from
          key: special.how        # the key in that ConfigMap
    - name: SPECIAL_TYPE_KEY
      valueFrom:
        configMapKeyRef:
          name: special-config
          key: special.type
  restartPolicy: Never
```

Test

```shell
[root@master yaml]# kubectl exec -it dapi-test-pod -- /bin/bash
root@dapi-test-pod:/# echo $SPECIAL_TYPE_KEY
charm
```

Use envFrom, configMapRef, and name to turn every key/value pair in the ConfigMap into an environment variable automatically

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dapi-test-pod
spec:
  containers:
  - name: test-container
    image: daocloud.io/library/nginx
    envFrom:
    - configMapRef:
        name: special-config
  restartPolicy: Never
```

The variable names in the container are then the key names from the ConfigMap

```shell
[root@master yaml]# kubectl exec -it dapi-test-pod -- /bin/bash
root@dapi-test-pod:/# env
HOSTNAME=dapi-test-pod
NJS_VERSION=1.15.8.0.2.7-1~stretch
NGINX_VERSION=1.15.8-1~stretch
KUBERNETES_PORT_443_TCP_PROTO=tcp
KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1
KUBERNETES_PORT=tcp://10.96.0.1:443
PWD=/
special.how=very
HOME=/root
KUBERNETES_SERVICE_PORT_HTTPS=443
KUBERNETES_PORT_443_TCP_PORT=443
KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443
TERM=xterm
SHLVL=1
KUBERNETES_SERVICE_PORT=443
PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
special.type=charm
KUBERNETES_SERVICE_HOST=10.96.0.1
```
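The second method in the list at the start of this section, using ConfigMap values in the command line the Pod runs, has no example of its own. A minimal sketch, assuming the same special-config ConfigMap: the values are first exposed as environment variables and then referenced in the container command with the `$(VAR_NAME)` syntax, which Kubernetes expands before the command starts. The Pod name is hypothetical.

```yaml
# Sketch only: ConfigMap values used in the container's command line.
apiVersion: v1
kind: Pod
metadata:
  name: dapi-test-pod-cmd        # hypothetical name
spec:
  containers:
  - name: test-container
    image: daocloud.io/library/nginx
    command: ["/bin/sh", "-c", "echo $(SPECIAL_LEVEL_KEY) $(SPECIAL_TYPE_KEY)"]
    env:
    - name: SPECIAL_LEVEL_KEY
      valueFrom:
        configMapKeyRef:
          name: special-config
          key: special.how
    - name: SPECIAL_TYPE_KEY
      valueFrom:
        configMapKeyRef:
          name: special-config
          key: special.type
  restartPolicy: Never
```

The container prints the two values and exits, so the result is read with `kubectl logs` on the Pod rather than with `kubectl exec`.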

Using it as a mounted volume:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-configmap
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-configmap
        image: daocloud.io/library/nginx:latest
        ports:
        - containerPort: 80
        volumeMounts:
        - name: config-volume4
          mountPath: /tmp/config4
      volumes:
      - name: config-volume4
        configMap:
          name: test-config4
```

Enter the container and look in /tmp/config4

```shell
[root@master yaml]# kubectl exec -it nginx-configmap-7447bf77d6-svj2t -- /bin/bash

root@nginx-configmap-7447bf77d6-svj2t:/# ls /tmp/config4/
cache_host cache_port cache_prefix my.cnf

root@nginx-configmap-7447bf77d6-svj2t:/# cat /tmp/config4/cache_host
memcached-gcxt
```

As shown, the config4 directory contains one file per key: the key is the file name and the value is the file content.

If you do not want the key names to be used as the file names, add an items field and list, one by one, each key together with the relative path that replaces it:

```yaml
volumes:
- name: config-volume4
  configMap:
    name: test-config4
    items:
    - key: my.cnf          # original key name
      path: mysql-key
    - key: cache_host      # original key name
      path: cache-host
```
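The items fragment above shows only the volumes part. As a sketch, a complete Pod that mounts test-config4 with renamed files could look like this; the Pod name and container name are hypothetical.

```yaml
# Sketch only: a full Pod using items to rename the projected files.
apiVersion: v1
kind: Pod
metadata:
  name: configmap-items-demo     # hypothetical name
spec:
  containers:
  - name: nginx
    image: daocloud.io/library/nginx:latest
    volumeMounts:
    - name: config-volume4
      mountPath: /tmp/config4
  volumes:
  - name: config-volume4
    configMap:
      name: test-config4
      items:
      - key: my.cnf
        path: mysql-key          # appears as /tmp/config4/mysql-key
      - key: cache_host
        path: cache-host         # appears as /tmp/config4/cache-host
```

Once items is specified, only the listed keys are projected into the volume, so cache_port and cache_prefix do not appear under /tmp/config4 in this sketch.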

Note:

Deleting the ConfigMap does not affect a Pod that is already running. If that Pod is then deleted, the events of the replacement Pod report that the ConfigMap for the volume cannot be found

If you modify the ConfigMap with kubectl edit configmap … after the Pod is up, the configuration inside the Pod is refreshed after a short delay

If you modify a mounted configuration file from inside the container, its content is reset to the original ConfigMap content after a short delay

MD/kubernetes集群Dashboard部署.md (Normal file, +119)

@ -0,0 +1,119 @

<h1><center>Kubernetes Cluster Dashboard Deployment</center></h1>

Author: 行癫 <piracy will be prosecuted>

------

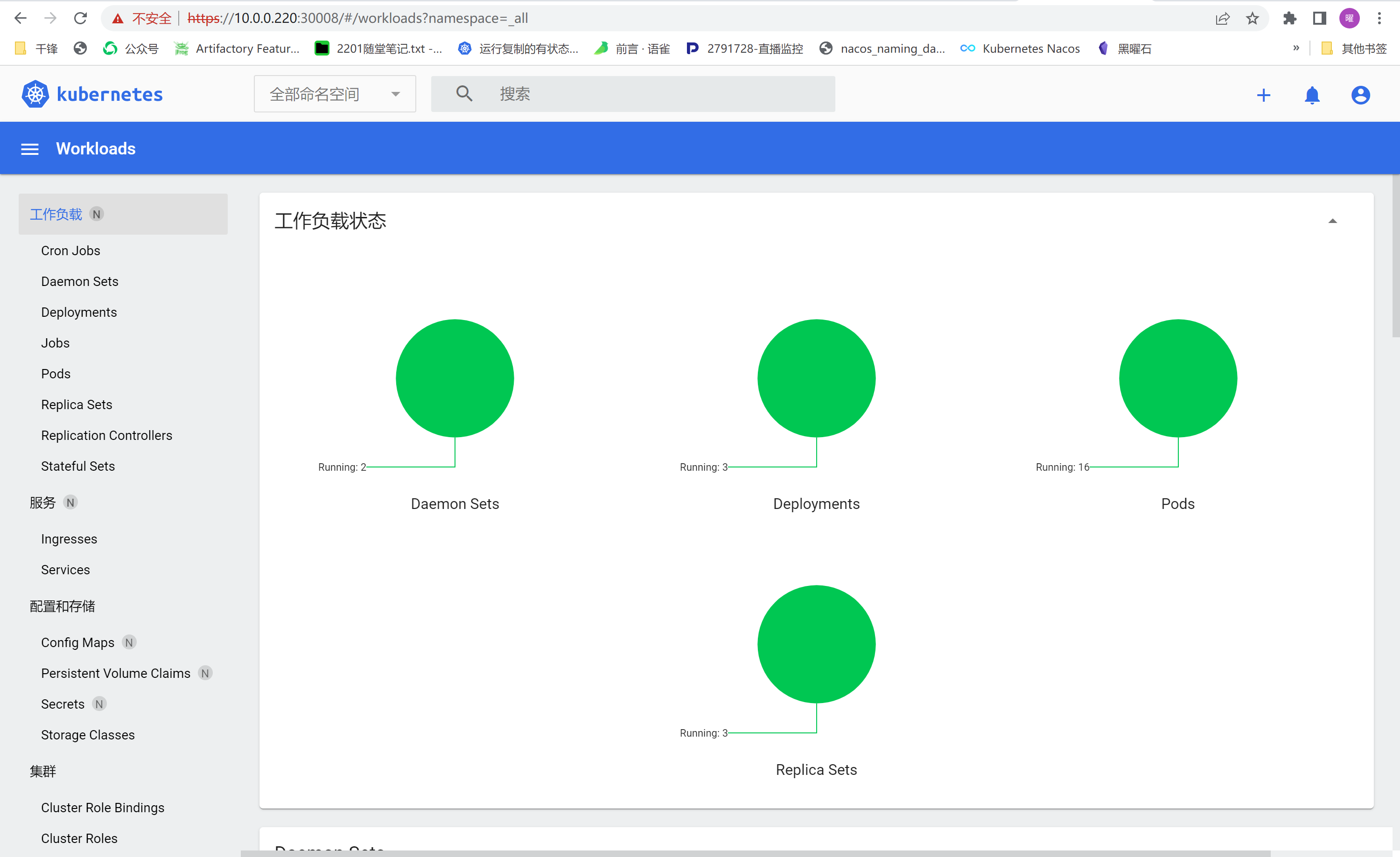

## I. Deploying the Dashboard

#### 1. Enable ipvs in kube-proxy

```shell
[root@k8s-master ~]# kubectl get configmap kube-proxy -n kube-system -o yaml > kube-proxy-configmap.yaml
[root@k8s-master ~]# sed -i 's/mode: ""/mode: "ipvs"/' kube-proxy-configmap.yaml
[root@k8s-master ~]# kubectl apply -f kube-proxy-configmap.yaml
[root@k8s-master ~]# rm -f kube-proxy-configmap.yaml
[root@k8s-master ~]# kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'
```
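The sed command in this step changes a single field of the kube-proxy configuration that is embedded in the ConfigMap. A trimmed sketch of the relevant part after the change, assuming a kubeadm-style cluster where the configuration is stored under the `config.conf` key (all other fields are omitted here):

```yaml
# Trimmed kube-proxy ConfigMap: only the field touched by sed is shown.
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-proxy
  namespace: kube-system
data:
  config.conf: |-
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: "ipvs"     # was: mode: ""
```

The running kube-proxy Pods read this file only at startup, which is why the last command in the step deletes them so that the DaemonSet recreates them with the new mode. ipvs mode also needs the IPVS kernel modules to be available on each node.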

#### 2. Download the Dashboard installation manifest

```shell
[root@master ~]# wget http://www.xingdiancloud.cn:92/index.php/s/yer7cWtxesEit2R/download/recommended.yaml
```

#### 3. Create the certificate

```shell
[root@k8s-master ~]# mkdir dashboard-certs
[root@k8s-master ~]# cd dashboard-certs/
# Create the namespace
[root@k8s-master ~]# kubectl create namespace kubernetes-dashboard
# Create the private key file
[root@k8s-master ~]# openssl genrsa -out dashboard.key 2048
# Create the certificate signing request
[root@k8s-master ~]# openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=dashboard-cert'
# Self-sign the certificate (the validity period is set here; -days has no effect on a plain CSR)
[root@k8s-master ~]# openssl x509 -req -days 36000 -in dashboard.csr -signkey dashboard.key -out dashboard.crt
# Create the kubernetes-dashboard-certs Secret
[root@k8s-master ~]# kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard
```

#### 4. Create an administrator

```shell
# Create the account
[root@k8s-master ~]# vim dashboard-admin.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: dashboard-admin
  namespace: kubernetes-dashboard
# Save and exit, then run
[root@k8s-master ~]# kubectl create -f dashboard-admin.yaml
# Grant permissions to the account
[root@k8s-master ~]# vim dashboard-admin-bind-cluster-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-bind-cluster-role
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kubernetes-dashboard
# Save and exit, then run
[root@k8s-master ~]# kubectl create -f dashboard-admin-bind-cluster-role.yaml
```

#### 5. Install the Dashboard

```shell
# Install
[root@k8s-master ~]# kubectl create -f ~/recommended.yaml

# Check the result
[root@k8s-master ~]# kubectl get pods -A -o wide

[root@k8s-master ~]# kubectl get service -n kubernetes-dashboard -o wide
NAME                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)         AGE   SELECTOR
dashboard-metrics-scraper   ClusterIP   10.1.186.219   <none>        8000/TCP        19m   k8s-app=dashboard-metrics-scraper
kubernetes-dashboard        NodePort    10.1.60.1      <none>        443:30008/TCP   19m   k8s-app=kubernetes-dashboard
```
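The `443:30008/TCP` entry in the service listing implies that the downloaded recommended.yaml exposes the Dashboard Service as a NodePort. The contents of that file are not shown in this document, so the following is only a sketch of what such a Service typically looks like; the `targetPort: 8443` value is an assumption based on the upstream Dashboard v2 manifest.

```yaml
# Sketch only: a NodePort Service matching the listing above.
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
  - port: 443
    targetPort: 8443     # assumption: the Dashboard container's HTTPS port
    nodePort: 30008      # the port reachable on every node
  selector:
    k8s-app: kubernetes-dashboard
```

If the file in use defines a plain ClusterIP Service instead, adding `type: NodePort` and a `nodePort` in the allowed range (30000 to 32767 by default) gives the same kind of external access.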

#### 6. View and copy the token

```shell
[root@master ~]# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep dashboard-admin | awk '{print $1}')
Name:         dashboard-admin-token-xlhzr
Namespace:    kubernetes-dashboard
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: dashboard-admin
              kubernetes.io/service-account.uid: a38e8ce3-848e-4d94-abcf-4d824deeb697

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1099 bytes
namespace:  20 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6InFsRE1GQi1KQnZsZHpUOGZ4WGc1dlU1UHg3UGVrcC02TUNyYmZWcHhFZ3MifQ… (remainder of the token omitted)
```
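The command in this step relies on a token Secret being generated automatically for the dashboard-admin ServiceAccount, as in the output shown. On Kubernetes 1.24 and later such Secrets are no longer created automatically, so the grep would find nothing. A minimal sketch of creating a long-lived token Secret by hand in that case; the Secret name `dashboard-admin-token` is an assumption chosen for this example.

```yaml
# Sketch only: a manually created token Secret for the ServiceAccount.
apiVersion: v1
kind: Secret
metadata:
  name: dashboard-admin-token          # hypothetical name
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: dashboard-admin
type: kubernetes.io/service-account-token
```

After this manifest is applied, the control plane fills in the token, and the describe secret command from this step shows it as before. Because the account is bound to cluster-admin, the token should be treated like a root password.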

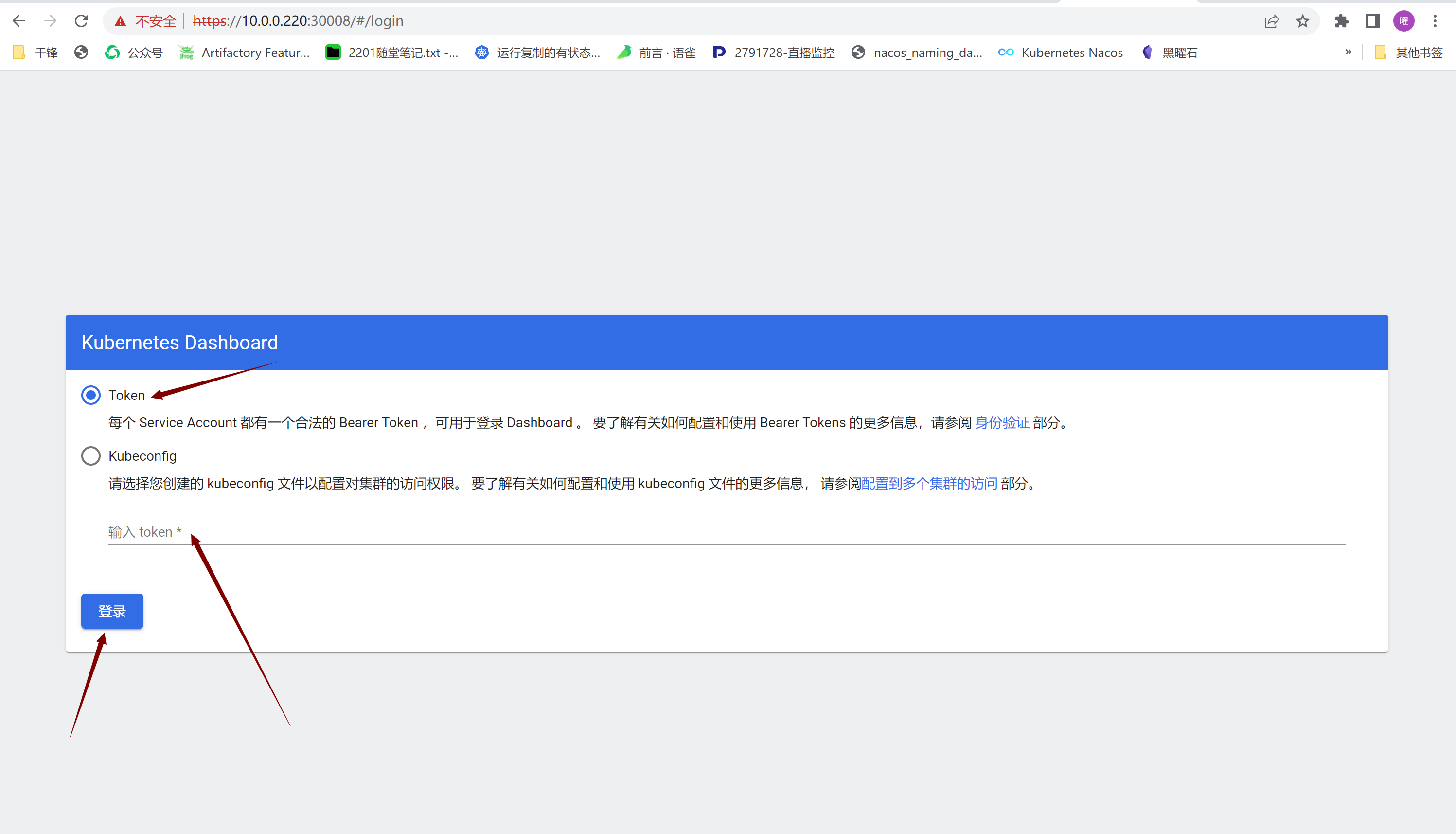

#### 7. Access from a browser

```shell
https://10.0.0.220:30008
```
