Dynamically Provisioning PVs in Kubernetes with StorageClass

Author: 行癫 (unauthorized reproduction will be pursued)

------

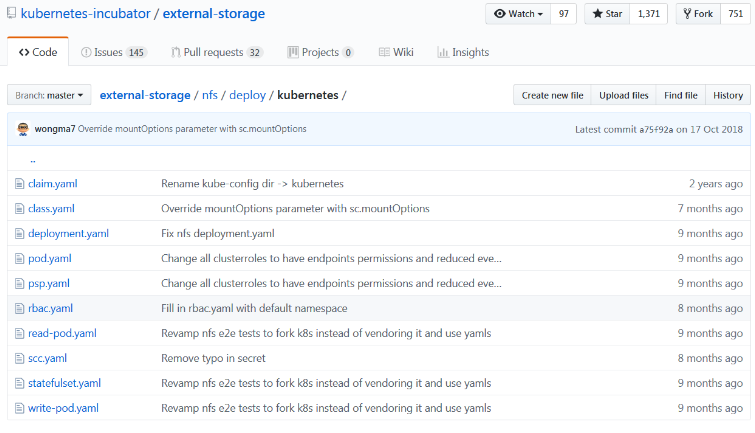

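## I: Install the NFS Plugin

- GitHub: https://github.com/kubernetes-incubator/external-storage/tree/master/nfs/deploy/kubernetes (this repository has since been retired; the NFS client provisioner is now maintained as `kubernetes-sigs/nfs-subdir-external-provisioner`, and the Deployment below uses an `nfs-subdir-external-provisioner` image)

#### 1. Configure the namespace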

```shell
[root@k8s-master StatefulSet]# ls
01-namespace.yaml  03-nfs-provisioner.yaml   05-test-claim.yaml  07-nginx-statefulset.yaml
02-rbac.yaml       04-nfs-StorageClass.yaml  06-test-pod.yaml
```

```shell
[root@k8s-master StatefulSet]# cat <<EOF > 01-namespace.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: dev
EOF
```

- Apply the manifest (`kubectl apply -f 01-namespace.yaml`), then confirm that the namespace exists

```shell
[root@k8s-master StatefulSet]# kubectl get ns
NAME                   STATUS   AGE
default                Active   12d
dev                    Active   60m
kube-node-lease        Active   12d
kube-public            Active   12d
kube-system            Active   12d
kubernetes-dashboard   Active   12d
```

#### 2. Configure authorization (RBAC)

```shell
[root@k8s-master StatefulSet]# cat <<EOF > 02-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: dev
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: managed-run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: dev
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: dev
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: dev
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: dev
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
EOF
```

- Apply 02-rbac.yaml

```shell
[root@k8s-master StatefulSet]# kubectl create -f 02-rbac.yaml
```

- Confirm the RBAC objects (ClusterRole and ClusterRoleBinding are cluster-scoped, so they need no namespace flag)

```shell
[root@k8s-master StatefulSet]# kubectl get clusterrole | grep nfs
nfs-client-provisioner-runner   2023-12-13T06:11:25Z
[root@k8s-master StatefulSet]# kubectl get clusterrolebindings.rbac.authorization.k8s.io | grep nfs
managed-run-nfs-client-provisioner   ClusterRole/nfs-client-provisioner-runner   57m
[root@k8s-master StatefulSet]# kubectl get -n dev role
NAME                                    CREATED AT
leader-locking-nfs-client-provisioner   2023-12-13T06:06:20Z
[root@k8s-master StatefulSet]# kubectl get -n dev rolebindings.rbac.authorization.k8s.io
NAME                                    ROLE                                         AGE
leader-locking-nfs-client-provisioner   Role/leader-locking-nfs-client-provisioner   58m
```
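Listing the objects shows that they exist, not that the bindings actually grant the ServiceAccount what it needs. As a rough sketch, `kubectl auth can-i` with impersonation can check a few of the permissions defined in 02-rbac.yaml. The helper names `sa_subject` and `check_provisioner_rbac` are made up for this illustration, the verbs checked are only a sample of the rules, and the check assumes your own user is allowed to impersonate ServiceAccounts.

```shell
# Hypothetical helpers, not part of the original manifests.

# Build the user name Kubernetes assigns to a ServiceAccount:
#   system:serviceaccount:<namespace>:<name>
sa_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server whether the provisioner's ServiceAccount may perform
# a sample of the actions granted in 02-rbac.yaml.
# Needs a working kubectl context; each call prints "yes" or "no".
check_provisioner_rbac() {
  subject="$(sa_subject dev nfs-client-provisioner)"
  kubectl auth can-i create persistentvolumes --as="$subject"
  kubectl auth can-i delete persistentvolumes --as="$subject"
  kubectl auth can-i update persistentvolumeclaims -n dev --as="$subject"
  kubectl auth can-i list storageclasses.storage.k8s.io --as="$subject"
  kubectl auth can-i update endpoints -n dev --as="$subject"
}

# Usage (against a live cluster):
#   check_provisioner_rbac
```

If any line prints `no`, the namespace in the binding's `subjects` entry is the first thing to compare against the namespace of the ServiceAccount.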

#### 3. Create the NFS provisioner

```shell
[root@k8s-master StatefulSet]# cat <<EOF > 03-nfs-provisioner.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: dev  # must match the namespace used in the RBAC file
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          #image: quay.io/external_storage/nfs-client-provisioner:latest
          image: easzlab/nfs-subdir-external-provisioner:v4.0.1
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: provisioner-nfs-storage  # provisioner name; must match the provisioner field in 04-nfs-StorageClass.yaml
            - name: NFS_SERVER
              value: master01  # NFS server hostname or IP address
            - name: NFS_PATH
              value: /data/volumes/v1  # exported NFS directory
      volumes:
        - name: nfs-client-root
          nfs:
            server: master01  # NFS server hostname or IP address
            path: /data/volumes/v1  # exported NFS directory
EOF
```

- Apply 03-nfs-provisioner.yaml

```shell
[root@k8s-master StatefulSet]# kubectl apply -f 03-nfs-provisioner.yaml
```

- View the Pod that was created

```shell
[root@k8s-master StatefulSet]# kubectl get pods -n dev
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-6df46fcf96-qnh64   1/1     Running   0          53m
```

#### 4. Create the StorageClass

```shell
[root@k8s-master StatefulSet]# cat <<EOF > 04-nfs-StorageClass.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass  # cluster-scoped, so it takes no namespace
metadata:
  name: managed-nfs-storage
provisioner: provisioner-nfs-storage  # must match the PROVISIONER_NAME environment variable in the provisioner Deployment
parameters:
  archiveOnDelete: "false"
EOF
```
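The one value that has to agree across the two files is the provisioner name: if `PROVISIONER_NAME` in 03-nfs-provisioner.yaml and `provisioner` in 04-nfs-StorageClass.yaml differ, PVCs using this class stay Pending. A minimal sketch of a pre-apply check follows. The function name `provisioner_names_match` is hypothetical, and the check is a plain-text scan with `awk` rather than a real YAML parser, so it assumes the simple one-key-per-line layout used in this guide.

```shell
# Hypothetical helper: compare the provisioner name in the two manifests.
# Plain-text scan, not a YAML parser; assumes the layout used in this guide.
provisioner_names_match() {
  deploy_file="$1"
  sc_file="$2"

  # First "value:" line after "name: PROVISIONER_NAME" in the Deployment.
  env_name="$(awk 'found && $1 == "value:" { print $2; exit }
                   /name: PROVISIONER_NAME/ { found = 1 }' "$deploy_file")"
  # The "provisioner:" field in the StorageClass.
  sc_name="$(awk '$1 == "provisioner:" { print $2; exit }' "$sc_file")"

  if [ -n "$env_name" ] && [ "$env_name" = "$sc_name" ]; then
    echo "match: $env_name"
  else
    echo "mismatch: deployment='$env_name' storageclass='$sc_name'"
    return 1
  fi
}

# Usage:
#   provisioner_names_match 03-nfs-provisioner.yaml 04-nfs-StorageClass.yaml
```

The function returns a non-zero status on a mismatch, so it can gate a `kubectl apply` in a script.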

- Apply 04-nfs-StorageClass.yaml

```shell
[root@k8s-master StatefulSet]# kubectl apply -f 04-nfs-StorageClass.yaml
```

- View the StorageClass that was created

```shell
[root@k8s-master StatefulSet]# kubectl get storageclasses.storage.k8s.io
NAME                  PROVISIONER               RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
managed-nfs-storage   provisioner-nfs-storage   Delete          Immediate           false                  3h1m
```

#### 5. Create a test PVC

```shell
[root@k8s-master StatefulSet]# cat <<EOF > 05-test-claim.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
  namespace: dev
  ## annotations:
  ##   volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"  # later Kubernetes versions no longer support this annotation; declare storageClassName instead
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: managed-nfs-storage  # must match metadata.name in 04-nfs-StorageClass.yaml
  resources:
    requests:
      storage: 1Mi
EOF
```

- Create the test PVC

```bash
[root@k8s-master StatefulSet]# kubectl create -f 05-test-claim.yaml
```

- Check that the PVC's status is Bound

```shell
[root@k8s-master StatefulSet]# kubectl get -n dev pvc
NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
test-claim   Bound    pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3   1Mi        RWX            managed-nfs-storage   34m
```

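- View the automatically created PV. (This sample was captured from a run in the `default` namespace; with the manifests above, the CLAIM column reads `dev/test-claim`.)

```shell
[root@k8s-master StatefulSet]# kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS          REASON   AGE
pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3   1Mi        RWX            Delete           Bound    default/test-claim   managed-nfs-storage            34m
```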

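- On the NFS server, the export directory now contains a subdirectory for the new volume.

- The directory name combines the namespace, the PVC name, and the PV name (`pvc-<uuid>`), so with the manifests above it starts with `dev-` rather than the `default-` shown in this sample:

```shell
[root@k8s-master StatefulSet]# ls -l /data/volumes/v1
total 0
drwxrwxrwx 2 root root 21 Jan 29 12:03 default-test-claim-pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3
```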
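The naming rule can be written down as a small helper, which is handy when you need to find a PVC's data on the NFS server. This is only a sketch of the default `<namespace>-<pvc-name>-<pv-name>` scheme described above: the function names `pv_dir_name` and `pvc_dir_on_nfs` are hypothetical, and a provisioner configured with a custom path pattern would not follow it.

```shell
# Hypothetical helper: build the directory name from its three parts,
#   <namespace>-<pvc-name>-<pv-name>
pv_dir_name() {
  printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Hypothetical helper: look up the PV bound to a PVC on a live cluster
# and print the matching directory name. Needs a working kubectl context.
pvc_dir_on_nfs() {
  namespace="$1"
  pvc="$2"
  pv="$(kubectl get pvc "$pvc" -n "$namespace" -o jsonpath='{.spec.volumeName}')"
  pv_dir_name "$namespace" "$pvc" "$pv"
}

# Example with the dev namespace from the manifests and the PV name from
# the sample output.
pv_dir_name dev test-claim pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3
# prints: dev-test-claim-pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3
```

On a live cluster, `pvc_dir_on_nfs dev test-claim` prints the directory to look for under `/data/volumes/v1`.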

#### 6. Create a test Pod

- The Pod mounts the PVC created above, test-claim.

- The Pod writes a file to /mnt and exits; finding that file on the NFS export confirms that the Pod's /mnt is backed by the NFS storage.

```shell
[root@k8s-master StatefulSet]# cat <<EOF > 06-test-pod.yaml
kind: Pod
apiVersion: v1
metadata:
  name: test-pod
  namespace: dev
spec:
  containers:
    - name: test-pod
      image: busybox:1.24
      command:
        - "/bin/sh"
      args:
        - "-c"
        - "touch /mnt/SUCCESS && exit 0 || exit 1"  # create a SUCCESS file, then exit
      volumeMounts:
        - name: nfs-pvc
          mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: test-claim  # must match the PVC name
EOF
```

- Apply 06-test-pod.yaml

```bash
[root@k8s-master StatefulSet]# kubectl apply -f 06-test-pod.yaml
```

- View the test Pod

```shell
[root@k8s-master StatefulSet]# kubectl get -n dev pod -o wide
NAME                                      READY   STATUS      RESTARTS   AGE   IP             NODE        NOMINATED NODE   READINESS GATES
nfs-client-provisioner-75bf876d88-578lg   1/1     Running     0          51m   10.244.2.131   k8s-node2
test-pod                                  0/1     Completed   0          41m   10.244.1.
```

- On the NFS server, check that the volume's subdirectory under the shared directory contains the "SUCCESS" file.

```shell
[root@k8s-master StatefulSet]# cd /data/volumes/v1
[root@k8s-master v1]# ll
total 0
drwxrwxrwx 2 root root 21 Jan 29 12:03 default-test-claim-pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3
[root@k8s-master v1]# cd default-test-claim-pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3/
[root@k8s-master default-test-claim-pvc-a17d9fd5-237a-11e9-a2b5-000c291c25f3]# ll
total 0
-rw-r--r-- 1 root root 0 Jan 29 12:03 SUCCESS
```

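#### 7. Clean up the test environment

- Delete the test Pod

```bash
[root@k8s-master StatefulSet]# kubectl delete -f 06-test-pod.yaml
```

- Delete the test PVC

```bash
[root@k8s-master StatefulSet]# kubectl delete -f 05-test-claim.yaml
```

- On the NFS server, the PV's directory is now gone from the shared directory, because the StorageClass sets `archiveOnDelete: "false"`.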

#### 8. Create an nginx StatefulSet that obtains PVs dynamically

```shell
[root@k8s-master StatefulSet]# cat <<EOF > 07-nginx-statefulset.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-headless
  namespace: dev
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  clusterIP: None  # None makes this a headless Service
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
  namespace: dev
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: "nginx-headless"
  replicas: 3  # three replicas
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:  # PVC template; one PVC is created per replica
    - metadata:
        name: www
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: "managed-nfs-storage"
        resources:
          requests:
            storage: 1Gi
EOF
```

- After applying the manifest (`kubectl apply -f 07-nginx-statefulset.yaml`), the Pods, PVs, and PVCs look like this:

```shell
[root@k8s-master StatefulSet]# kubectl get -n dev pods,pv,pvc
NAME                                          READY   STATUS      RESTARTS   AGE
pod/nfs-client-provisioner-5778d56949-ltjbt   1/1     Running     0          42m
pod/test-pod                                  0/1     Completed   0          36m
pod/web-0                                     1/1     Running     0          2m23s
pod/web-1                                     1/1     Running     0          2m6s
pod/web-2                                     1/1     Running     0          104s

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS          REASON   AGE
persistentvolume/pvc-1d54bb5b-9c12-41d5-9295-3d827a20bfa2   1Gi        RWO            Delete           Bound    default/www-web-2    managed-nfs-storage            104s
persistentvolume/pvc-8cc0ed15-1458-4384-8792-5d4fd65dca66   1Gi        RWO            Delete           Bound    default/www-web-0    managed-nfs-storage            39m
persistentvolume/pvc-c924a2aa-f844-4d52-96c9-32769eb3f96f   1Mi        RWX            Delete           Bound    default/test-claim   managed-nfs-storage            38m
persistentvolume/pvc-e30333d7-4aed-4700-b381-91a5555ed59f   1Gi        RWO            Delete           Bound    default/www-web-1    managed-nfs-storage            2m6s

NAME                               STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
persistentvolumeclaim/test-claim   Bound    pvc-c924a2aa-f844-4d52-96c9-32769eb3f96f   1Mi        RWX            managed-nfs-storage   38m
persistentvolumeclaim/www-web-0    Bound    pvc-8cc0ed15-1458-4384-8792-5d4fd65dca66   1Gi        RWO            managed-nfs-storage   109m
persistentvolumeclaim/www-web-1    Bound    pvc-e30333d7-4aed-4700-b381-91a5555ed59f   1Gi        RWO            managed-nfs-storage   2m6s
persistentvolumeclaim/www-web-2    Bound    pvc-1d54bb5b-9c12-41d5-9295-3d827a20bfa2   1Gi        RWO            managed-nfs-storage   104s
```
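The PVC names in this output follow the StatefulSet convention `<volumeClaimTemplate name>-<StatefulSet name>-<ordinal>`, which is why the template `www` and the StatefulSet `web` yield `www-web-0` through `www-web-2`. A small sketch that lists the names to expect for a given replica count; the function name `sts_pvc_names` is hypothetical.

```shell
# Hypothetical helper: print the PVC names a StatefulSet creates from one
# volumeClaimTemplate: <template>-<statefulset>-<ordinal>, ordinals from 0.
sts_pvc_names() {
  template="$1"
  statefulset="$2"
  replicas="$3"
  i=0
  while [ "$i" -lt "$replicas" ]; do
    printf '%s-%s-%s\n' "$template" "$statefulset" "$i"
    i=$((i + 1))
  done
}

# Template "www", StatefulSet "web", 3 replicas, as in the nginx manifest.
sts_pvc_names www web 3
# prints:
#   www-web-0
#   www-web-1
#   www-web-2
```

Because the names are derived from the ordinal, a recreated `web-1` Pod reattaches to the existing `www-web-1` claim instead of getting a new volume.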

- The NFS server also shows three automatically created directories, one per StatefulSet replica. Deleting a Pod does not delete its data, because the PVC and PV remain.

```shell
[root@k8s-master StatefulSet]# ll /data/volumes/v1/
total 4
drwxrwxrwx 2 root root  6 Aug 16 18:21 default-nginx-web-0-pvc-ea22de1c-fa33-4f82-802c-f04fe3630007
drwxrwxrwx 2 root root 21 Aug 16 18:25 default-test-claim-pvc-c924a2aa-f844-4d52-96c9-32769eb3f96f
drwxrwxrwx 2 root root  6 Aug 16 18:21 default-www-web-0-pvc-8cc0ed15-1458-4384-8792-5d4fd65dca66
drwxrwxrwx 2 root root  6 Aug 16 18:59 default-www-web-1-pvc-e30333d7-4aed-4700-b381-91a5555ed59f
drwxrwxrwx 2 root root  6 Aug 16 18:59 default-www-web-2-pvc-1d54bb5b-9c12-41d5-9295-3d827a20bfa2
```