
AI Large Models: Combining Ollama with Open-webui

Author: 行癫 (piracy will be prosecuted)

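I: Getting to Know Ollama

1. What is Ollama

Ollama is an open-source LLM (large language model) serving tool that simplifies running large language models locally. By lowering the barrier to using LLMs, it lets developers, researchers and hobbyists quickly experiment with, manage and deploy recent models in their own environment.

2. Official website

Official site: https://ollama.com/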

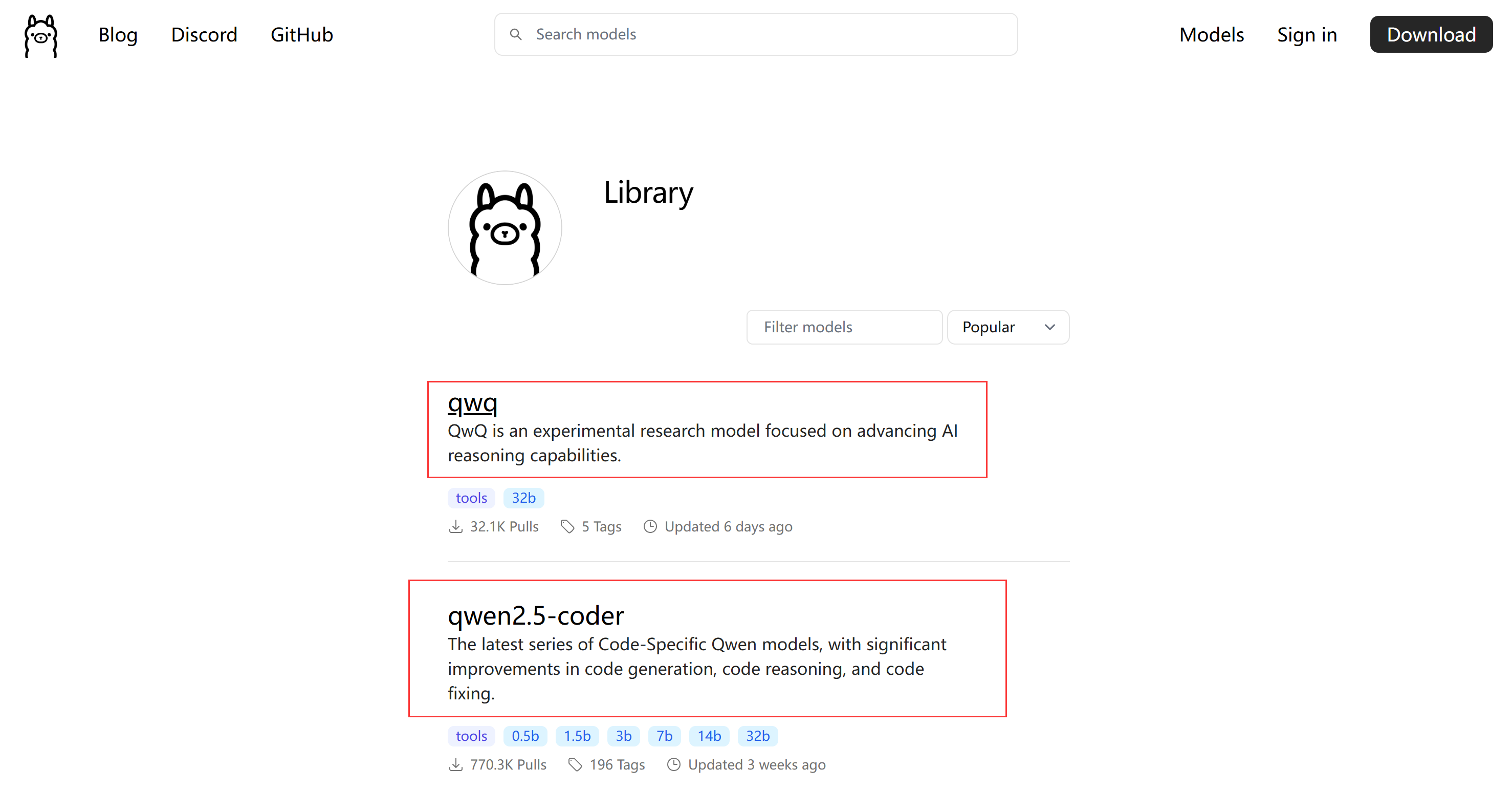

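The models currently available through Ollama are listed at: https://ollama.com/library

Ollama download (Linux amd64 binary): https://ollama.com/download/ollama-linux-amd64.tgz

3. Notes

qwen, qwq, Llama and similar names refer to large language models.

Ollama is a convenient tool for managing and operating large language models, not only the Llama family.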

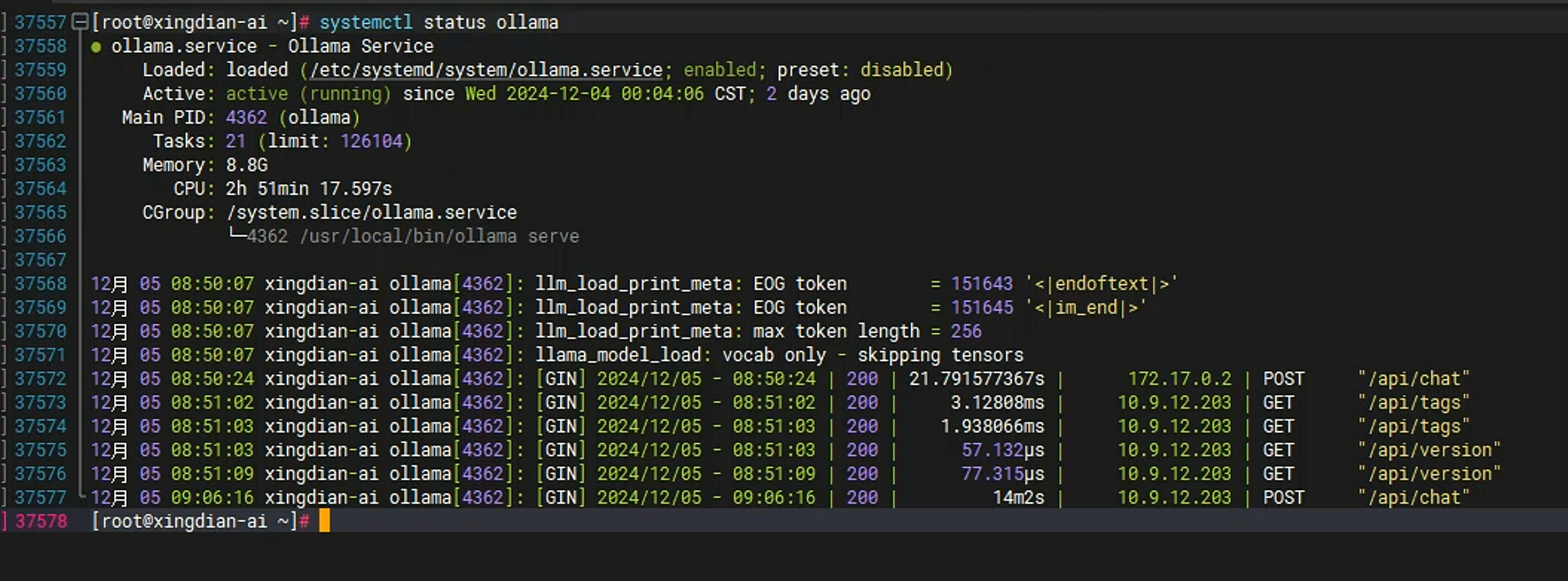

II: Installing and Deploying Ollama

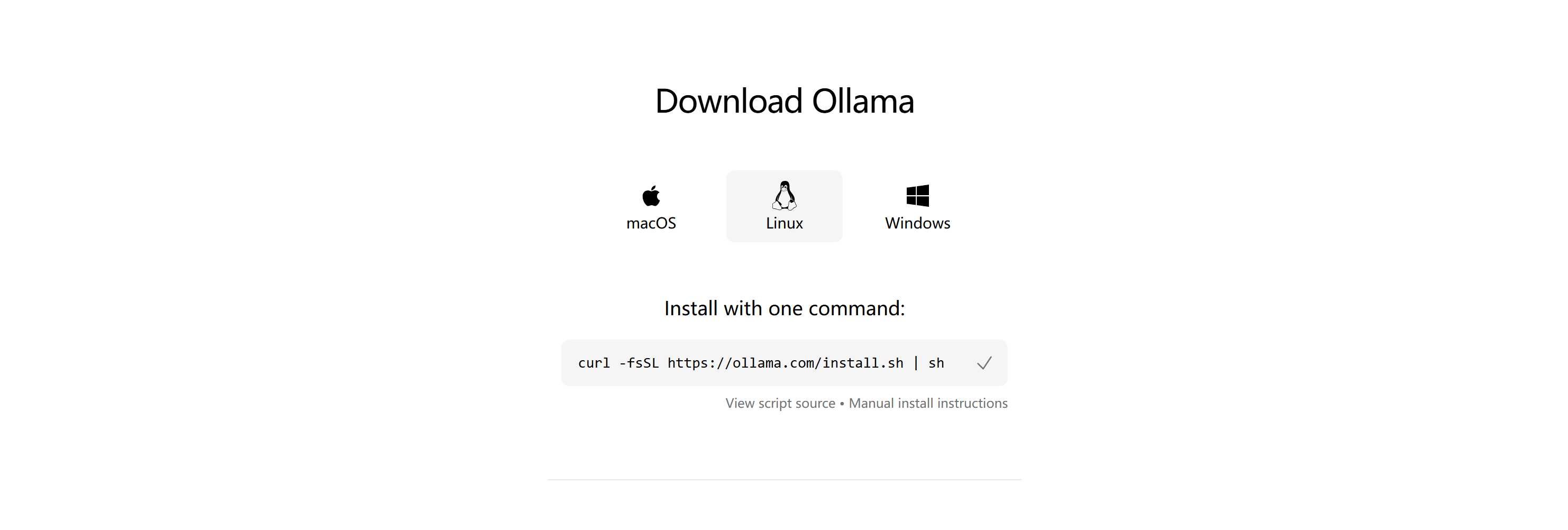

1. Installation with the official script

Note: the server must be able to reach the internet, including GitHub.

curl -fsSL https://ollama.com/install.sh | sh

2. Binary installation

Reference: https://github.com/ollama/ollama/blob/main/docs/linux.md

Download the binary package

curl -L https://ollama.com/download/ollama-linux-amd64.tgz -o ollama-linux-amd64.tgz
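The URL above is for x86-64 hosts. On ARM64 servers the file name changes to ollama-linux-arm64.tgz. The sketch below picks the tarball from the host's CPU architecture; the mapping table is an assumption of this example and covers only these two builds.

```python
import platform
from typing import Optional

# Assumed mapping from platform.machine() values to the suffixes used in
# Ollama's Linux tarball names (ollama-linux-amd64.tgz, ollama-linux-arm64.tgz).
ARCH_SUFFIX = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
}

DOWNLOAD_BASE = "https://ollama.com/download"


def tarball_url(machine: Optional[str] = None) -> str:
    """Return the Linux tarball URL for `machine` (defaults to this host's CPU)."""
    machine = (machine or platform.machine()).lower()
    if machine not in ARCH_SUFFIX:
        raise ValueError(f"unsupported architecture: {machine!r}")
    return f"{DOWNLOAD_BASE}/ollama-linux-{ARCH_SUFFIX[machine]}.tgz"


if __name__ == "__main__":
    # Print the URL to pass to: curl -L <url> -o <file>
    print(tarball_url())
```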

Extract and install

sudo tar -C /usr -xzf ollama-linux-amd64.tgz

Create a dedicated user and group, then add the current user to the ollama group

sudo useradd -r -s /bin/false -U -m -d /usr/share/ollama ollama

sudo usermod -a -G ollama $(whoami)

Create the systemd unit file /etc/systemd/system/ollama.service

[Unit]
Description=Ollama Service
After=network-online.target

[Service]
ExecStart=/usr/bin/ollama serve
User=ollama
Group=ollama
Restart=always
RestartSec=3
Environment="PATH=$PATH"

[Install]
WantedBy=default.target
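By default Ollama listens only on 127.0.0.1:11434, so an Open WebUI instance on another machine (such as the one configured later with http://10.9.12.10:11434) cannot connect. Ollama reads the OLLAMA_HOST environment variable to change the listen address, and with systemd the usual way to set it is a drop-in override. The sketch below only renders that drop-in text; choosing 0.0.0.0:11434 is an assumption about your network.

```python
from pathlib import Path
from typing import Dict

# Drop-in file that `systemctl edit ollama.service` creates; systemd merges it
# into the unit written above, so the original unit file stays untouched.
OVERRIDE = Path("/etc/systemd/system/ollama.service.d/override.conf")


def render_override(env: Dict[str, str]) -> str:
    """Render a [Service] drop-in with one Environment= line per variable."""
    lines = ["[Service]"]
    for key, value in sorted(env.items()):
        lines.append(f'Environment="{key}={value}"')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Listen on all interfaces so that a remote Open WebUI can reach port 11434.
    print(f"# save as {OVERRIDE}")
    print(render_override({"OLLAMA_HOST": "0.0.0.0:11434"}), end="")
    print("# then: sudo systemctl daemon-reload && sudo systemctl restart ollama")
```

Ollama's API has no built-in authentication, so after binding to 0.0.0.0 restrict access to port 11434 with a firewall or security group.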

Start the service

sudo systemctl daemon-reload

sudo systemctl enable ollama

sudo systemctl start ollama

Note: to run Ollama manually in the foreground instead, execute:

ollama serve
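Whether Ollama runs under systemd or through `ollama serve`, a quick check is to query its HTTP API on port 11434: GET / answers with the text "Ollama is running", and GET /api/version returns the version as JSON. A minimal standard-library sketch; the default address assumes a local install.

```python
import json
import urllib.request


def is_ollama_running(base_url: str = "http://127.0.0.1:11434", timeout: float = 3.0) -> bool:
    """Return True if GET {base_url}/ answers with Ollama's banner text."""
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
            return b"Ollama is running" in resp.read()
    except OSError:  # URLError, refused connections and timeouts all derive from OSError
        return False


def ollama_version(base_url: str = "http://127.0.0.1:11434", timeout: float = 3.0) -> str:
    """Return the server version reported by GET /api/version."""
    with urllib.request.urlopen(base_url.rstrip("/") + "/api/version", timeout=timeout) as resp:
        return json.load(resp)["version"]


if __name__ == "__main__":
    if is_ollama_running():
        print("Ollama is up, version", ollama_version())
    else:
        print("Ollama is not reachable on 127.0.0.1:11434")
```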

3. Container installation

CPU Only:

docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

GPU Install:

Reference: https://github.com/ollama/ollama/blob/main/docs/docker.md

4. Kubernetes installation

apiVersion: apps/v1
kind: Deployment
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/layer: web
    k8s.kuboard.cn/name: ollama
  name: ollama
  namespace: xingdian-ai
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/layer: web
      k8s.kuboard.cn/name: ollama
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        k8s.kuboard.cn/layer: web
        k8s.kuboard.cn/name: ollama
    spec:
      containers:
        - image: >-
            swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/ollama/ollama:latest
          imagePullPolicy: IfNotPresent
          name: ollama
          ports:
            - containerPort: 11434
              protocol: TCP
          volumeMounts:
            - mountPath: /root/.ollama
              name: volume-28ipz
      restartPolicy: Always
      volumes:
        - name: volume-28ipz
          nfs:
            path: /data/ollama
            server: 10.9.12.250
---
apiVersion: v1
kind: Service
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/layer: web
    k8s.kuboard.cn/name: ollama
  name: ollama
  namespace: xingdian-ai
spec:
  clusterIP: 10.109.25.34
  clusterIPs:
    - 10.109.25.34
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    - name: 3scykr
      port: 11434
      protocol: TCP
      targetPort: 11434
  selector:
    k8s.kuboard.cn/layer: web
    k8s.kuboard.cn/name: ollama
  sessionAffinity: None
  type: ClusterIP
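Instead of the fixed ClusterIP 10.109.25.34, clients inside the cluster can reach this Service by its DNS name, which Kubernetes forms as `<service>.<namespace>.svc.<cluster-domain>`. The helper below builds that URL; "cluster.local" is only the common default cluster domain and is an assumption about your cluster.

```python
def service_url(name: str, namespace: str, port: int, scheme: str = "http",
                cluster_domain: str = "cluster.local", fqdn: bool = True) -> str:
    """In-cluster URL of a Kubernetes Service.

    DNS form: <name>.<namespace>.svc[.<cluster_domain>]; the short form without
    the cluster domain also resolves from Pods through their DNS search list.
    """
    host = f"{name}.{namespace}.svc"
    if fqdn:
        host += f".{cluster_domain}"
    return f"{scheme}://{host}:{port}"


if __name__ == "__main__":
    # Candidate OLLAMA_BASE_URL for an Open WebUI Pod in the same cluster
    print(service_url("ollama", "xingdian-ai", 11434))
```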

5. Basic usage

[root@xingdian-ai ~]# ollama -h
Large language model runner

Usage:
  ollama [flags]
  ollama [command]

Available Commands:
  serve       Start ollama
  create      Create a model from a Modelfile
  show        Show information for a model
  run         Run a model
  stop        Stop a running model
  pull        Pull a model from a registry
  push        Push a model to a registry
  list        List models
  ps          List running models
  cp          Copy a model
  rm          Remove a model
  help        Help about any command

Flags:
  -h, --help      help for ollama
  -v, --version   Show version information

Use "ollama [command] --help" for more information about a command.

Pull a model

[root@xingdian-ai ~]# ollama pull gemma2:2b

Note: Gemma is Google's family of large language models.

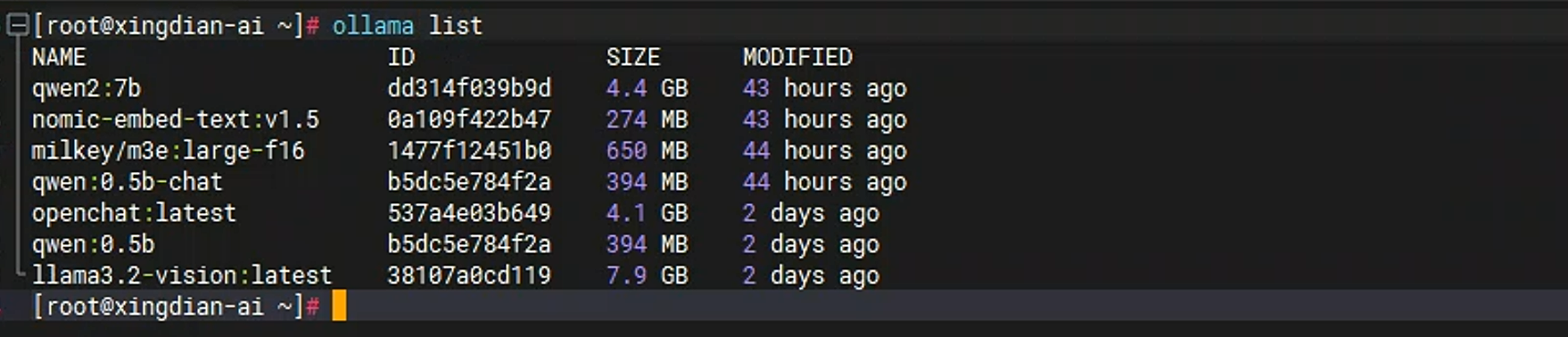
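The same download can be triggered over the REST API with POST /api/pull, which streams one JSON object per line: a `status` field and, while layers download, `total` and `completed` byte counts. The sketch assumes a server on 127.0.0.1:11434 and the request key `model` (older Ollama versions used `name`); the progress formatting is illustrative.

```python
import json
import urllib.request
from typing import Iterable, Iterator, Optional, Tuple


def pull_progress(lines: Iterable[bytes]) -> Iterator[Tuple[str, Optional[float]]]:
    """Turn the NDJSON stream of POST /api/pull into (status, percent) pairs.

    percent is None for steps that carry no byte counts, e.g. "pulling manifest".
    """
    for raw in lines:
        if not raw.strip():
            continue  # skip blank lines
        event = json.loads(raw)
        if "error" in event:
            raise RuntimeError(event["error"])
        total, done = event.get("total"), event.get("completed")
        percent = round(100 * done / total, 1) if total and done is not None else None
        yield event.get("status", ""), percent


def pull(model: str, base_url: str = "http://127.0.0.1:11434") -> None:
    """Pull `model` through the REST API and print progress lines."""
    body = json.dumps({"model": model}).encode()  # older servers expected "name"
    req = urllib.request.Request(base_url + "/api/pull", data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:  # iterating the response yields lines
        for status, percent in pull_progress(resp):
            print(status if percent is None else f"{status}: {percent}%")


if __name__ == "__main__":
    pull("gemma2:2b")
```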

List the models available locally

[root@xingdian-ai ~]# ollama list
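The REST counterpart of `ollama list` is GET /api/tags, whose JSON body has a `models` array with each model's `name` and `size` in bytes. The sketch below formats that into name and size rows; the column layout is this example's own and is not meant to reproduce the CLI output exactly.

```python
import json
import urllib.request


def format_listing(tags: dict) -> list:
    """Format a GET /api/tags body as 'NAME  SIZE' rows (bytes shown as GB)."""
    rows = []
    for model in sorted(tags.get("models", []), key=lambda m: m["name"]):
        size_gb = model.get("size", 0) / 1e9
        rows.append(f"{model['name']:<24} {size_gb:.1f} GB")
    return rows


def list_models(base_url: str = "http://127.0.0.1:11434") -> list:
    """Fetch /api/tags from a running server and format it."""
    with urllib.request.urlopen(base_url + "/api/tags", timeout=5) as resp:
        return format_listing(json.load(resp))


if __name__ == "__main__":
    print("\n".join(list_models()))
```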

Remove a model

[root@xingdian-ai ~]# ollama rm qwen2:7b

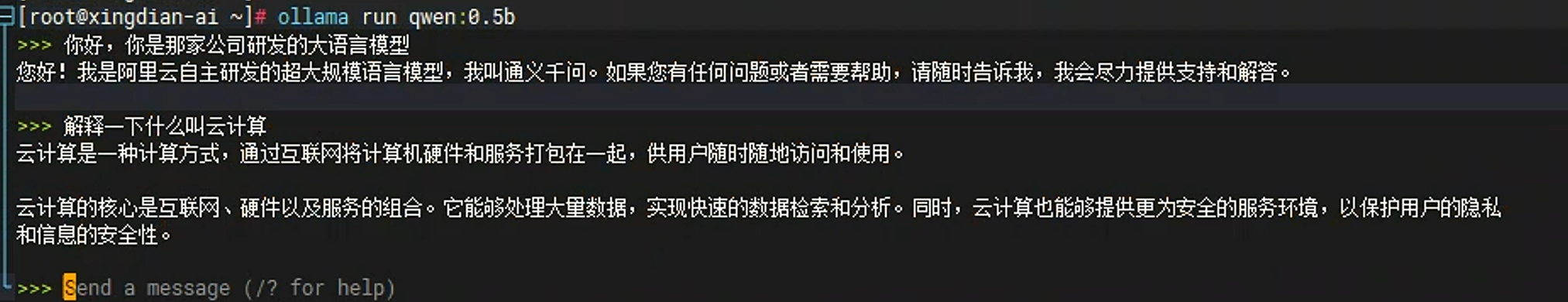
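Over the REST API, a model is removed with DELETE /api/delete and the model name in a JSON body. Because the body travels with a DELETE request, the sketch builds the request object explicitly; the `model` key is assumed (older versions used `name`), as is the local address.

```python
import json
import urllib.request


def delete_request(model: str, base_url: str = "http://127.0.0.1:11434") -> urllib.request.Request:
    """Build a DELETE /api/delete request for `model`."""
    body = json.dumps({"model": model}).encode()
    return urllib.request.Request(
        base_url + "/api/delete",
        data=body,
        headers={"Content-Type": "application/json"},
        method="DELETE",
    )


if __name__ == "__main__":
    with urllib.request.urlopen(delete_request("qwen2:7b")) as resp:
        print(resp.status)  # 200 on success; an unknown model raises HTTPError (404)
```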

Run a model

[root@xingdian-ai ~]# ollama run qwen:0.5b
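`ollama run` opens an interactive chat; programs usually call the REST API instead. POST /api/generate streams newline-delimited JSON objects by default, each carrying a `response` text fragment and a `done` flag. The sketch joins the fragments into one answer; the model and prompt are only examples, and the address assumes a local server.

```python
import json
import urllib.request
from typing import Iterable


def join_stream(lines: Iterable[bytes]) -> str:
    """Concatenate the "response" fragments of a streamed /api/generate reply."""
    parts = []
    for raw in lines:
        if not raw.strip():
            continue
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final object carries timing stats, not more text
    return "".join(parts)


def generate(prompt: str, model: str = "qwen:0.5b",
             base_url: str = "http://127.0.0.1:11434") -> str:
    """Send one prompt to /api/generate (streaming is the server default)."""
    body = json.dumps({"model": model, "prompt": prompt}).encode()
    req = urllib.request.Request(base_url + "/api/generate", data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return join_stream(resp)


if __name__ == "__main__":
    print(generate("Introduce yourself in one sentence."))
```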

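III: Getting to Know Open-webui

1. What is Open-webui

Open-WebUI provides a graphical web interface through which users can conveniently interact with language models; in this setup it serves as the chat front end for the models hosted by Ollama.

2. Official site

Official site: https://github.com/open-webui/open-webui

IV: Installation, Deployment and Usage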

1. Container installation

docker run -d -p 3000:8080 -e OLLAMA_BASE_URL=https://example.com -e HF_ENDPOINT=https://hf-mirror.com -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Mirror of the image reachable from mainland China: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/ghcr.io/open-webui/open-webui:main

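OLLAMA_BASE_URL: the address of the Ollama server. https://example.com is a placeholder; replace it with your Ollama address, for example http://<ollama-host>:11434.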
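Before starting the container it can help to sanity-check the OLLAMA_BASE_URL value. The function below applies a few heuristic checks that are assumptions of this sketch, not Open WebUI's own validation: an http(s) scheme, a host other than the example.com placeholder, an explicit port (Ollama's default is 11434), and no loopback address when Open WebUI runs in a container, where localhost refers to the container itself.

```python
from urllib.parse import urlsplit

LOOPBACK = {"localhost", "127.0.0.1", "::1"}


def base_url_problems(url: str, in_container: bool = True) -> list:
    """Return heuristic problems found in an OLLAMA_BASE_URL value (empty list = none found)."""
    problems = []
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        problems.append("scheme should be http or https")
    host = parts.hostname
    if not host:
        problems.append("missing host")
    elif host == "example.com":
        problems.append("placeholder host: replace with the real Ollama address")
    elif in_container and host in LOOPBACK:
        problems.append("localhost inside a container points at the container, not at Ollama")
    if host and parts.port is None:
        problems.append("no explicit port: Ollama listens on 11434 unless a proxy is in front")
    return problems


if __name__ == "__main__":
    for candidate in ("https://example.com", "http://localhost:11434", "http://10.9.12.10:11434"):
        print(candidate, base_url_problems(candidate) or "OK")
```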

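Because huggingface.co cannot be reached directly from networks in mainland China, HF_ENDPOINT points Hugging Face downloads at hf-mirror.com, a mirror that is reachable there. Without it, Open WebUI fails to download its default embedding model, sentence-transformers/all-MiniLM-L6-v2.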

Error:

OSError: We couldn't connect to 'https://huggingface.co' to load this file, couldn't find it in the cached files and it looks like sentence-transformers/all-MiniLM-L6-v2 is not the path to a directory containing a file named config.json.

Checkout your internet connection or see how to run the library in offline mode at 'https://huggingface.co/docs/transformers/installation#offline-mode'.

No WEBUI_SECRET_KEY provided

Fix: use the mirror with -e HF_ENDPOINT=https://hf-mirror.com
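Why a single environment variable fixes the error: the Hugging Face client library (huggingface_hub) reads HF_ENDPOINT from the process environment and builds file download URLs of the form `<endpoint>/<repo_id>/resolve/<revision>/<filename>` from it. The sketch mimics that URL construction for the model named in the error; it illustrates the mechanism and is not huggingface_hub's code. The variable must be present before Open WebUI starts, which is what `-e` provides.

```python
import os

DEFAULT_ENDPOINT = "https://huggingface.co"


def hf_file_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Illustrative only: build a Hub "resolve" URL, honouring HF_ENDPOINT."""
    endpoint = os.environ.get("HF_ENDPOINT", DEFAULT_ENDPOINT).rstrip("/")
    return f"{endpoint}/{repo_id}/resolve/{revision}/{filename}"


if __name__ == "__main__":
    # With -e HF_ENDPOINT=https://hf-mirror.com this prints a hf-mirror.com URL.
    print(hf_file_url("sentence-transformers/all-MiniLM-L6-v2", "config.json"))
```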

2. Kubernetes deployment

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: ai
  name: ai
  namespace: xingdian-ai
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/layer: svc
      k8s.kuboard.cn/name: ai
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        k8s.kuboard.cn/layer: svc
        k8s.kuboard.cn/name: ai
    spec:
      containers:
        - env:
            - name: OLLAMA_BASE_URL
              value: 'http://10.9.12.10:11434'
            - name: HF_ENDPOINT
              value: 'https://hf-mirror.com'
          image: '10.9.12.201/ollama/open-webui:main'
          imagePullPolicy: Always
          name: ai
          ports:
            - containerPort: 8080
              protocol: TCP
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
---
apiVersion: v1
kind: Service
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: ai
  name: ai
  namespace: xingdian-ai
spec:
  clusterIP: 10.99.5.168
  clusterIPs:
    - 10.99.5.168
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    - name: cnr2rn
      port: 8080
      protocol: TCP
      targetPort: 8080
  selector:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: ai
  sessionAffinity: None
  type: ClusterIP

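3. Routing traffic with Kong Ingress

Create an upstream whose target is the Service address ai.xingdian-ai.svc:8080 (Service ai in namespace xingdian-ai)

Create a service

Create a route

Configure DNS resolution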

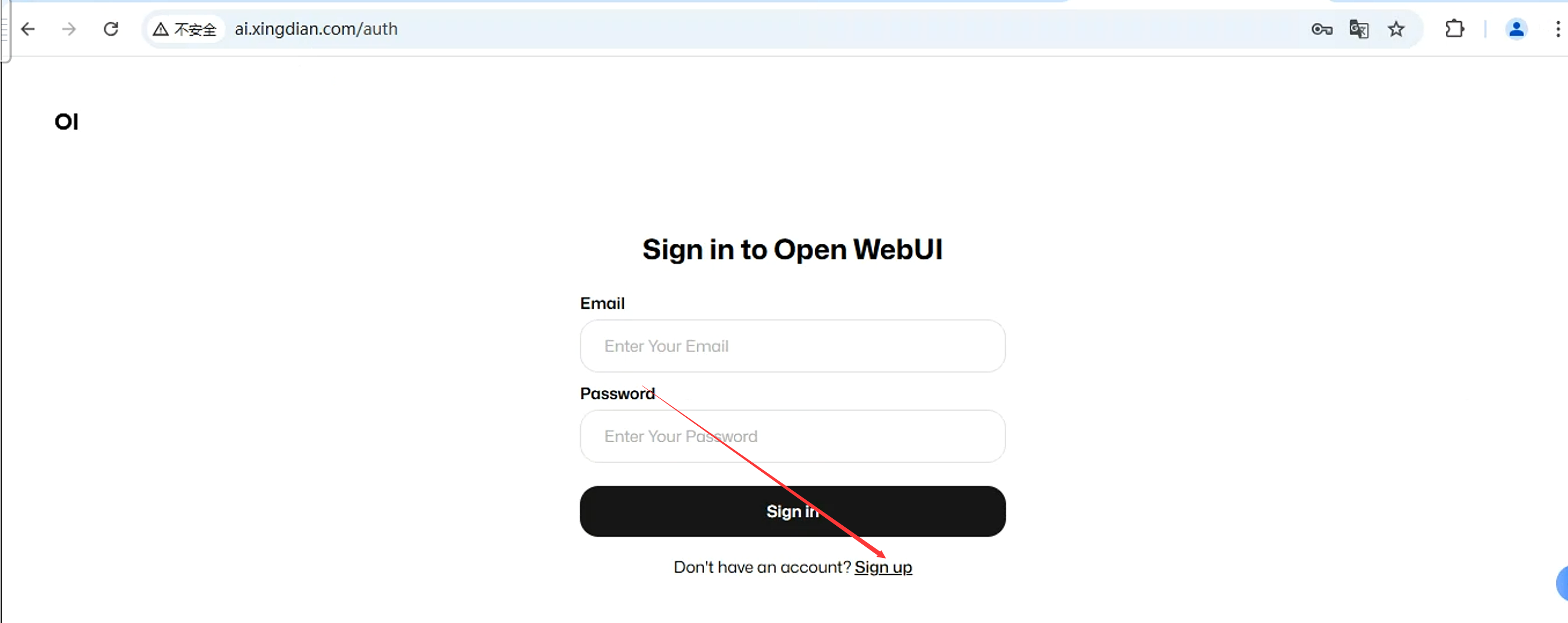

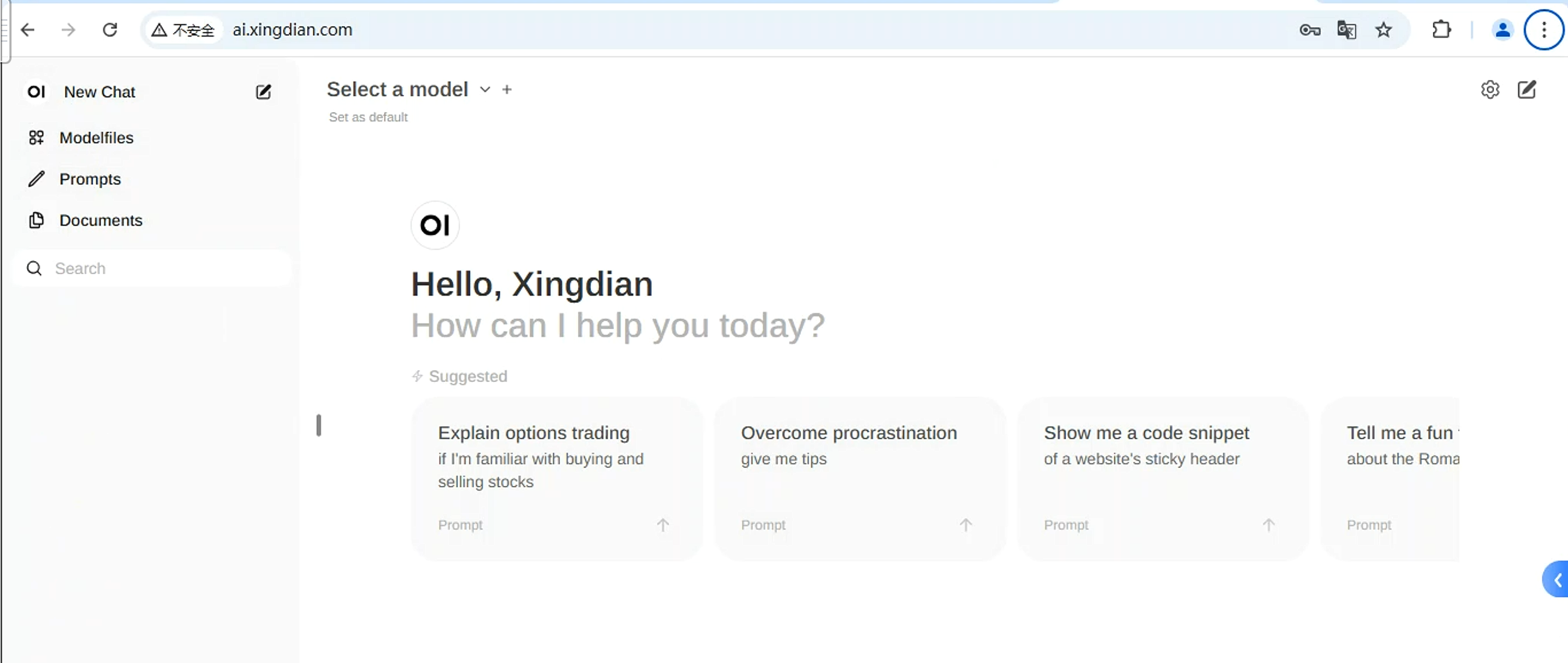
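The four Kong objects above can also be created through Kong's Admin API. The sketch only builds and prints the ordered calls. It assumes a database-backed Kong whose Admin API is writable at http://127.0.0.1:8001 (Kong's default Admin port; a DB-less deployment, as typically used by the Kong Ingress Controller, rejects such writes), and the names open-webui-upstream, open-webui and the hostname ai.example.com are hypothetical. The target follows Kubernetes DNS naming for Service `ai` in namespace `xingdian-ai`.

```python
import json
from typing import Any, Dict, List, Tuple

Call = Tuple[str, str, Dict[str, Any]]


def kong_plan(upstream: str, target: str, service: str, route_host: str) -> List[Call]:
    """Ordered Kong Admin API calls: upstream -> target -> service -> route."""
    return [
        ("POST", "/upstreams", {"name": upstream}),
        ("POST", f"/upstreams/{upstream}/targets", {"target": target}),
        # A Service whose host equals an upstream name is balanced across that upstream's targets.
        ("POST", "/services", {"name": service, "host": upstream, "protocol": "http"}),
        ("POST", f"/services/{service}/routes", {"name": f"{service}-route", "hosts": [route_host]}),
    ]


if __name__ == "__main__":
    admin = "http://127.0.0.1:8001"  # assumed Admin API address
    plan = kong_plan("open-webui-upstream", "ai.xingdian-ai.svc:8080", "open-webui", "ai.example.com")
    for method, path, body in plan:
        print(method, admin + path, json.dumps(body))
```

Each printed line corresponds to one JSON POST request; the DNS record from the last step must then resolve the route's hostname to Kong's proxy address.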

4. Browser access

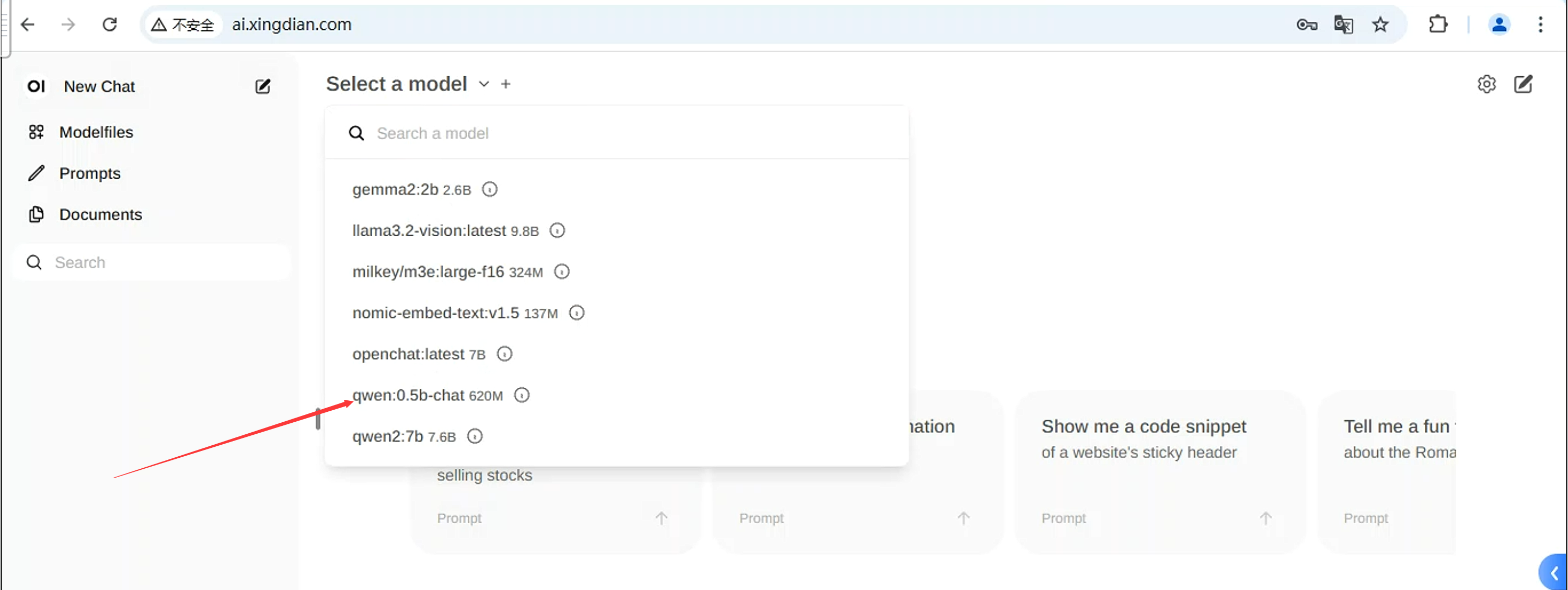

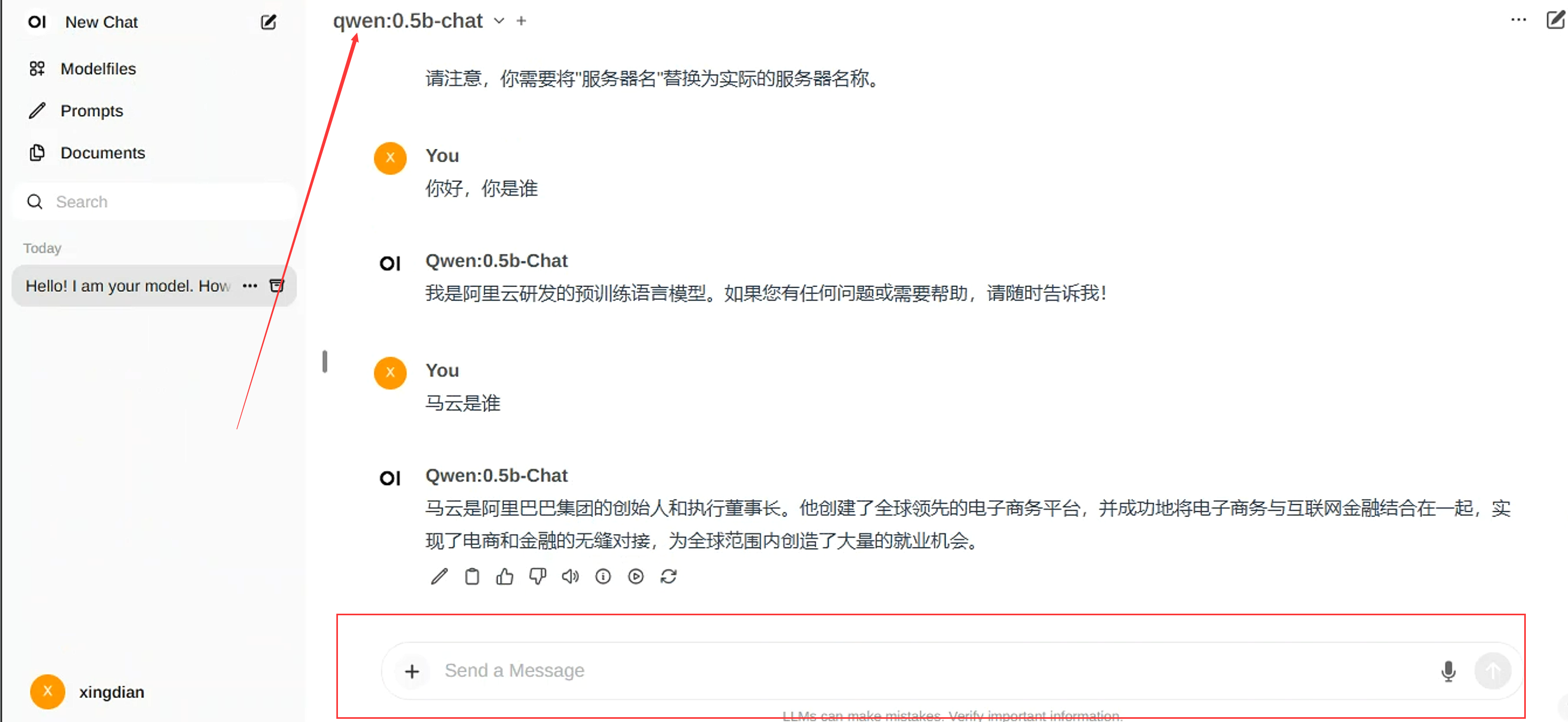

Pull a model in Ollama beforehand. This example uses qwen:0.5b, because the other models consume considerably more resources.
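Open WebUI's model selector typically lists the models Ollama has already pulled, so it is worth confirming qwen:0.5b is present before opening the browser. The sketch checks a GET /api/tags body and accounts for Ollama treating a name without a tag as `<name>:latest`; the local address is an assumption.

```python
import json
import urllib.request


def normalize(name: str) -> str:
    """Append Ollama's implicit ":latest" tag when a model name has none."""
    last = name.rsplit("/", 1)[-1]  # ignore a registry host:port prefix
    return name if ":" in last else name + ":latest"


def has_model(tags: dict, wanted: str) -> bool:
    """True if a GET /api/tags body already lists `wanted`."""
    want = normalize(wanted)
    return any(normalize(m["name"]) == want for m in tags.get("models", []))


if __name__ == "__main__":
    with urllib.request.urlopen("http://127.0.0.1:11434/api/tags", timeout=5) as resp:
        tags = json.load(resp)
    print("qwen:0.5b is ready" if has_model(tags, "qwen:0.5b") else "run: ollama pull qwen:0.5b")
```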